May 07, 2024

Publication in the Journal of Finance

What happens if 164 research teams from more than 30 countries independently test the same hypotheses on the same data set? Do they find the same, or at least similar, results?

The #fincap project aimed to get to the bottom of this question. Coordinated by researchers from Vrije Universiteit Amsterdam, Stockholm School of Economics, and Leopold-Franzens-Universität Innsbruck, the project provided 164 research teams (one or two members each) from 34 countries with a unique data set, sponsored by Deutsche Börse, consisting of 720 million trade records in EuroStoxx 50 index futures. Over a six-month period that included several rounds of peer review, the research teams tested six hypotheses on market efficiency and liquidity and submitted a short paper with their results to the #fincap organizers.

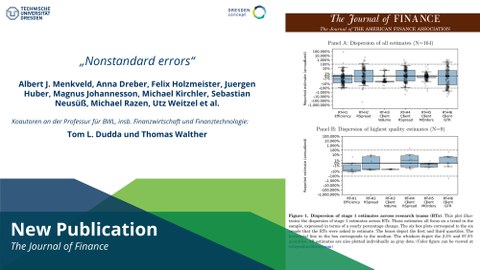

In addition to the standard errors of the estimates used to test the hypotheses, the outcome shows sizeable variation in the results across research teams. These “nonstandard” errors stem from the different choices researchers make in the evidence-generating process, and they add uncertainty to the results reported in scientific research papers. The study finds that nonstandard errors are smaller for higher-quality and more reproducible research. The peer-review process reduces them further. However, researchers seem to substantially underestimate the size of nonstandard errors.

Members of our chair also took part in this unique research project as members of research teams: Dr. Thomas Walther (Research Fellow), Tom Dudda (PhD Student), and Dr. Tony Klein (alumnus, now at Technische Universität Chemnitz). All participants in the project are listed as co-authors on the resulting publication.

The paper has been published open access in the latest issue (Vol. 79, No. 3) of the Journal of Finance. More information can be found on the #fincap project website or in this short video trailer.