17.06.2024

Talk "Opacity" by Nelly Y. Pinkrah on June 18th @Uni Kassel, at the workshop "After Explainability: AI Metaphors and Materialisations Beyond Transparency"

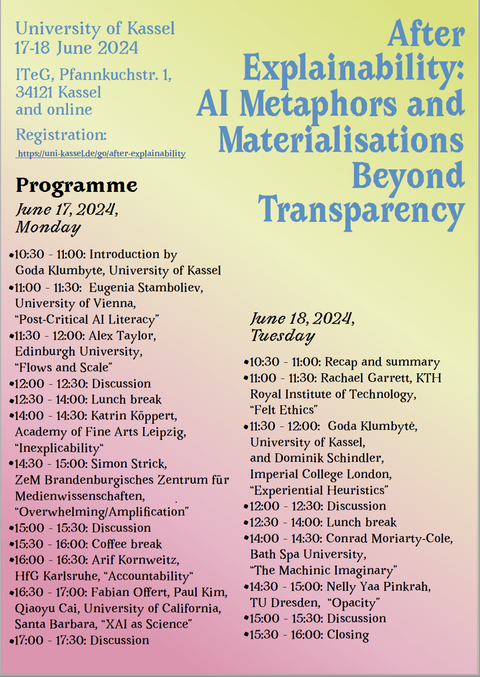

After Explainability: AI Metaphors and Materialisations Beyond Transparency

In the last few decades, the explainability and interpretability of AI and machine learning systems have become important societal and political concerns, exemplified by the negative cases of “black box” algorithms. Often predicated on the ideal of transparency of algorithmic decision-making (ADM) systems and their mechanisms of inference, explainability and interpretability become features of ADM systems, to be designed either incrementally during the development process or addressed post factum through various explanation methods and techniques. These features are also implicitly or explicitly connected to the ethics of algorithms: as the logic goes, more transparent, interpretable, and explainable systems can enable the humans who use them to make better decisions and can lead to more trustworthy AI applications. In this sense, the capacity for ethical interaction with AI rests on the understandability of such systems, which in turn relies on these systems being transparent and/or interpretable enough to be explainable.

Even though explainability and transparency of AI can indeed give users greater agency, both are often defined in narrow, technical terms. Explanations might illuminate how a system generates inferences (for example, by demonstrating which variables contribute most to a decision), but they might not address the broader social, political, and environmental effects of such systems. Furthermore, explainability and transparency design is often geared towards engineers themselves or direct users of the systems (as opposed to a broader audience or those negatively affected). It relies heavily on natural language explanations and visualisations as the main modalities of communication, appealing to universal, disembodied reason as the main form of perception.

While these more conventional research areas are important and valuable, this workshop calls for more exploratory approaches to ethical interactions with/in AI beyond concepts of transparency and explainability, particularly by engaging the rich knowledges of the humanities and social sciences. We are especially interested in how the goals of explainability, transparency, and ethics could be rethought in light of other epistemic traditions, such as feminist, Black, Indigenous, and post/de-colonial thought, new materialisms, critical posthumanism, and other critical theoretical perspectives. How could concepts such as opacity/poetics of relation (Glissant, Ferreira da Silva), embodied/carnal knowledges (Bolt and Barrett), affective resonances (Paasonen), reparation (Davis et al.), response-ability (Haraway), friction (Hamraie and Fritsch), holistic futuring (Sloane et al.), and other terms rooted in critical perspectives generate different articulations of the framework of interpretability/explainability in AI? How might theoretical premises such as relational ethics (Birhane et al.), care ethics (Puig de la Bellacasa), cloud ethics (Amoore), and other conceptual apparatuses offer alternative ways of engaging in interactions with ADM systems? Participants from a diverse spectrum of disciplines are welcome, including media studies, philosophy, history of technology, the social sciences and humanities more broadly, as well as arts, design, and machine learning.