Image-based interpretation of point clouds in CAD software environment

Project title

Automated referencing between point clouds and digital images

Funding

This project was funded by the European Regional Development Fund (EFRE) and by the Free State of Saxony.

Motivation

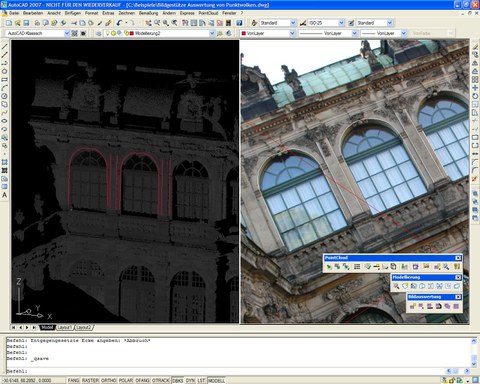

Photogrammetric 3D object reconstruction can be based on images or on laser scanner data. Because of their complementary characteristics, these data are well suited for a combined interpretation. Terrestrial laser scanner point clouds provide accurate and reliable 3D information about the scanned objects, while the high geometric resolution and visual quality of images can support the reconstruction of 3D features from these point clouds. This opens up a new option for 3D object reconstruction: 3D geometries can be obtained by monoplotting-like procedures, i.e. by mapping in monocular images and deriving the depth information automatically from the laser scanner data (Figure 1). The prerequisite for the integrated use of images and point clouds in measurement and interpretation processes is a correct geometric referencing of the data, which is the subject of this project.

Figure 1: Image-based interpretation of point clouds in the software PointCloud by kubit GmbH
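The monoplotting idea can be illustrated with a minimal sketch, assuming an already oriented image (perspective centre `X0`, rotation matrix `R` from the camera to the object system, principal distance `c`) and a point cloud given as an N×3 NumPy array. The hypothetical function `monoplot` casts the image ray into object space and takes the nearest point cloud point lying within a small tolerance of that ray as the depth source. A practical tool would more likely use a spatial index and a more robust local surface estimate than this brute-force search.

```python
import numpy as np

def monoplot(img_xy, X0, R, c, cloud, tolerance=0.02, x0=0.0, y0=0.0):
    """Hypothetical monoplotting step: intersect an image ray with a point cloud.

    img_xy: measured image point, X0: perspective centre (3,),
    R: rotation camera -> object system (3, 3), c: principal distance,
    cloud: (N, 3) laser scanner points, tolerance: max. distance from the ray.
    Returns the 3D point on the ray, or None if the ray misses the cloud.
    """
    # Image vector in the camera system (photogrammetric convention: view along -z)
    p = np.array([img_xy[0] - x0, img_xy[1] - y0, -c], dtype=float)
    d = R @ p
    d /= np.linalg.norm(d)                      # unit ray direction in object space
    v = cloud - X0                              # vectors from X0 to all scan points
    t = v @ d                                   # depth of each point along the ray
    perp = np.linalg.norm(v - np.outer(t, d), axis=1)  # distance of each point to the ray
    mask = (t > 0) & (perp <= tolerance)        # candidates in front of the camera
    if not np.any(mask):
        return None
    t_hit = t[mask].min()                       # nearest candidate = visible surface
    return X0 + t_hit * d
```

The tolerance should be chosen relative to the scan point spacing; too small a value lets rays slip between scan points, too large a value mixes foreground and background depths.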

Tasks and purpose of the project

The purpose of the project is to develop methods for an efficient, accurate and reliable geometric referencing of terrestrial laser scanner data and photos taken independently of the laser scanner. To this end, the exterior orientation of a single image is determined by measuring corresponding point and/or straight-line features in the image and in a point cloud of the same scene. Because many users (for example archaeologists, architects and monument conservators) take their images with amateur digital cameras, it should also be possible to calibrate the camera simultaneously by determining the interior orientation and the lens distortion for each image. The project comprises the following subtasks:

- Geometric referencing with interactively measured point features

- Geometric referencing with interactively measured line features

- Automatic extraction of linear features from images

- Automatic extraction of linear features from point clouds

- Automatic feature matching
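For the simultaneous camera calibration mentioned above, lens distortion is often described by Brown's radial and decentring model. The sketch below evaluates one common form of it. The function name, the parameter set (`k1`, `k2`, `k3`, `p1`, `p2`) and the sign convention are assumptions: photogrammetric packages differ in how many coefficients they use and in whether the correction is added to or subtracted from the measured coordinates.

```python
def distortion(x, y, x0=0.0, y0=0.0, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Radial and decentring distortion (one common form of Brown's model).

    Returns the corrections (dx, dy) for the image point (x, y). Coordinates are
    first reduced to the principal point (x0, y0).
    """
    xb, yb = x - x0, y - y0
    r2 = xb * xb + yb * yb
    # Radial part: polynomial in the squared radial distance
    radial = k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    # Decentring (tangential) part with coefficients p1, p2
    dx = xb * radial + p1 * (r2 + 2 * xb * xb) + 2 * p2 * xb * yb
    dy = yb * radial + p2 * (r2 + 2 * yb * yb) + 2 * p1 * xb * yb
    return dx, dy
```

In a self-calibrating resection, the principal distance, the principal point and these coefficients would be estimated together with the six exterior orientation parameters.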

Referencing with interactively measured point and line features

Principle

Orienting a single image primarily means determining its exterior orientation, i.e. the orientation angles and the position of the perspective centre relative to the reference system of the laser scanner point cloud. The orientation parameters are determined indirectly by measuring imaged object features in the photo. If these features are control points whose coordinates are known both in the image and in the object reference system given by the laser scanner data, the image can be oriented by a classical single photo resection.
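Single photo resection can be sketched as a Gauss-Newton adjustment of the collinearity equations. The example below is a minimal illustration, not the implementation in PointCloud. It assumes an omega-phi-kappa rotation sequence, the principal point at the image origin, no lens distortion, and a forward-difference Jacobian in place of analytical derivatives; `rotation_matrix`, `project` and `resect` are illustrative names.

```python
import numpy as np

def rotation_matrix(omega, phi, kappa):
    """R = R_omega @ R_phi @ R_kappa (rotations about the X, Y and Z axes)."""
    co, so = np.cos(omega), np.sin(omega)
    cp, sp = np.cos(phi), np.sin(phi)
    ck, sk = np.cos(kappa), np.sin(kappa)
    r_omega = np.array([[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]])
    r_phi = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    r_kappa = np.array([[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]])
    return r_omega @ r_phi @ r_kappa

def project(params, obj_pts, c):
    """Collinearity equations: (N, 3) object points -> (N, 2) image points.

    params = [X0, Y0, Z0, omega, phi, kappa]; principal point at the origin.
    """
    u = (obj_pts - params[:3]) @ rotation_matrix(*params[3:6])  # rows: R^T (X - X0)
    return np.column_stack([-c * u[:, 0] / u[:, 2], -c * u[:, 1] / u[:, 2]])

def resect(img_pts, obj_pts, c, initial, iterations=20, h=1e-7):
    """Single photo resection by Gauss-Newton iteration."""
    params = np.asarray(initial, dtype=float).copy()
    for _ in range(iterations):
        predicted = project(params, obj_pts, c).ravel()
        residual = np.asarray(img_pts, dtype=float).ravel() - predicted
        # Numerical Jacobian of the image coordinates w.r.t. the six unknowns
        jac = np.empty((residual.size, 6))
        for k in range(6):
            step = np.zeros(6)
            step[k] = h
            jac[:, k] = (project(params + step, obj_pts, c).ravel() - predicted) / h
        delta = np.linalg.lstsq(jac, residual, rcond=None)[0]
        params += delta
        if np.max(np.abs(delta)) < 1e-12:
            break
    return params
```

Three control points give the minimum of six observations; in practice more well-distributed points and a reasonable approximate pose are needed, and the redundancy allows the accuracy of the orientation to be assessed.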

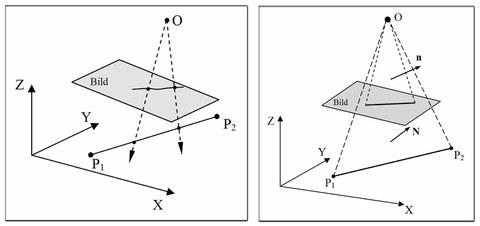

Because laser scanner data sample the object surface only at discrete points, distinctive points are not always well defined in 3D point clouds. This effect grows with decreasing scan resolution and/or increasing distance from the object. To overcome this, we switch to methods working with straight lines. Line photogrammetry distinguishes two principal approaches: the collinearity approach and the coplanarity approach (Figure 2). While the former is based on the collinearity equations, the latter is based on the condition that the image line, the object line and the perspective centre lie in one plane.

Figure 2: Approaches to line-based image orientation: collinearity approach (left) and coplanarity approach (right)
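The two line conditions can be written as residual functions for a single line correspondence. This is a generic sketch, not the parameterisation used by Schenk (2004), Schwermann (1995) or Tommaselli & Tozzi (1996). It assumes that the image line is given by two measured points reduced to the principal point, the object line by two 3D points, and that `R` rotates camera coordinates into the object system. The collinearity-type residual projects the object points into the image and measures their distance from the image line; the coplanarity residual measures the distance of the object points from the plane through the perspective centre and the image line. Both vanish for a correct orientation. The function names are hypothetical.

```python
import numpy as np

def _image_vector(pt, c):
    """Image point as a 3D vector in the camera system (viewing direction -z)."""
    return np.array([pt[0], pt[1], -c], dtype=float)

def coplanarity_residuals(img_a, img_b, obj_a, obj_b, X0, R, c):
    """Signed distances of the object points from the interpretation plane."""
    # Plane normal: cross product of the two image vectors, rotated into object space
    n = R @ np.cross(_image_vector(img_a, c), _image_vector(img_b, c))
    n /= np.linalg.norm(n)
    return np.array([n @ (np.asarray(P, float) - X0) for P in (obj_a, obj_b)])

def collinearity_line_residuals(img_a, img_b, obj_a, obj_b, X0, R, c):
    """Signed image distances of the projected object points from the image line."""
    a, b = np.asarray(img_a, float), np.asarray(img_b, float)
    direction = b - a
    normal = np.array([-direction[1], direction[0]]) / np.linalg.norm(direction)
    residuals = []
    for P in (obj_a, obj_b):
        u = R.T @ (np.asarray(P, float) - X0)      # collinearity equations
        projected = np.array([-c * u[0] / u[2], -c * u[1] / u[2]])
        residuals.append(normal @ (projected - a))
    return np.array(residuals)
```

In an adjustment, these residuals for all line correspondences would be minimised over the orientation parameters, analogously to point-based resection.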

In a developer version of the software PointCloud, the point-based method and three line-based referencing methods were implemented. The line-based methods of (Schenk, 2004) and (Schwermann, 1995) are based on the collinearity equations, whereas the approach of (Tommaselli & Tozzi, 1996) uses the coplanarity condition.

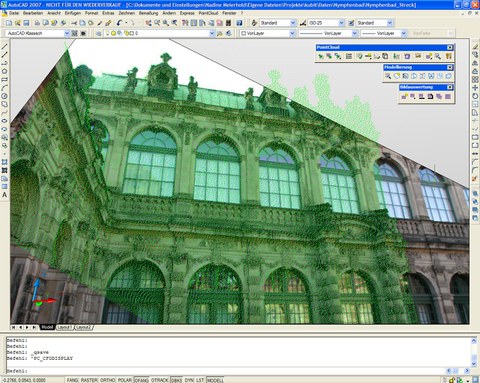

Figure 3: Image referenced in PointCloud, overlaid with the corresponding laser scanner point cloud (object: Nymphenbad in Dresden)

Results

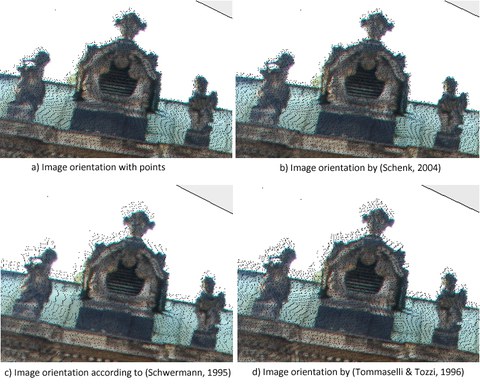

Using lines rather than discrete points may be a valuable option for orienting single digital images to laser scanner 3D point clouds. The results show that line features are as well suited as discrete points, or even better suited, for the referencing between images and laser scanner point clouds (Figure 4). This advantage becomes more pronounced as the point spacing increases, provided that the object lines are modelled in the point cloud (e.g. by intersecting fitted planes).

Figure 4: Images oriented with the different methods, overlaid with the laser scanner point cloud
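Modelling an object line by intersecting fitted planes can be sketched in a few lines, assuming the scan points of the two adjacent surfaces have already been segmented. The hypothetical function `fit_plane` estimates a least-squares plane through the centroid via a singular value decomposition, and `intersect_planes` returns a point on the intersection line together with its unit direction.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through (N, 3) points: returns unit normal n and d with n . X = d."""
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    # The right singular vector of the smallest singular value is the plane normal
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    n = vt[-1]
    return n, n @ centroid

def intersect_planes(n1, d1, n2, d2):
    """Intersection line of the planes n1 . X = d1 and n2 . X = d2."""
    n1, n2 = np.asarray(n1, float), np.asarray(n2, float)
    direction = np.cross(n1, n2)
    norm = np.linalg.norm(direction)
    if norm < 1e-12:
        raise ValueError("planes are (nearly) parallel")
    direction /= norm
    # A third condition (direction . X = 0) picks the line point closest to the origin
    A = np.vstack([n1, n2, direction])
    point = np.linalg.solve(A, np.array([d1, d2, 0.0]))
    return point, direction
```

Because each plane is estimated from many scan points, the resulting line is less sensitive to the point spacing than a single scan point picked at an edge or corner.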

In terms of accuracy, a clear ranking of the line-based methods emerges. The point-to-line resection of (Schenk, 2004) performs better than the line-to-line approach according to (Schwermann, 1995). The applied coplanarity model (Tommaselli & Tozzi, 1996) yields the poorest accuracy and, because it lacks parameters for the interior orientation of the camera, is not suited for self-calibration.

Related Publications

Project partner

The research was carried out in cooperation with kubit GmbH in Dresden.

Contact

- Prof. Dr. habil. Hans-Gerd Maas (project management)

- Dipl.-Ing. Nadine Stelling, Dipl.-Ing. Anne Bienert (project work)