Selected bibliometric indicators

Roughly speaking, bibliometrics measures the influence of a person (author-level indicators), a work (publication-level indicators) or a journal (journal-level indicators) on science. All indicators are essentially based on measures of publication output (e.g. number of publications) or on measures of reception by the scientific community (e.g. number of citations).

Newer indicators also take into account social media (altmetrics), the references a work itself cites (measures of disruptiveness), and the context, function and value of citations (citation content/context analysis).

This page presents a selection of indicators, discusses them briefly and points to further reading.


Author-level indicators

Publications and citations are counted and evaluated per author.

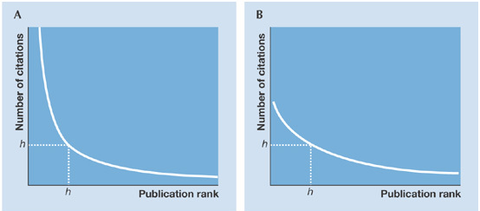

Hirsch index (h-index)

h is the largest number such that h of a person's publications have each been cited at least h times (e.g. h = 7 means that the person has 7 publications cited at least 7 times each, but not 8 publications cited at least 8 times each).
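The definition can be checked with a short Python sketch. The function name `h_index` and the citation counts are illustrative assumptions, not data from any database. The second call also shows why the index hardly reacts to a single very highly cited paper.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h publications have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most cited first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have >= rank citations
        else:
            break  # counts only decrease from here, so h cannot grow further
    return h


# Hypothetical citation counts of one author's ten publications
papers = [25, 8, 7, 7, 7, 7, 7, 3, 1, 0]
print(h_index(papers))           # 7
# One additional blockbuster paper does not change the index:
print(h_index(papers + [5000]))  # 7
```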

What explains the enormous popularity of this measure?

- (comparatively) easy to calculate and compare

- combines a measure of productivity (number of publications) with a measure of impact (citations)

- robust against a few very highly cited publications as well as against many rarely cited ones

Criticism

- biased by scientific age (career length)

- insensitive to changes in how a researcher's work is perceived and in their productivity (the index never decreases)

- says nothing about how citations are distributed across publications; for a detailed discussion see Bornmann / Daniel (2009), DOI: 10.1038/embor.2008.233

In response to this criticism, the method has been developed further, e.g. into the annual h-index, the g-index and Google Scholar's i10-index.
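Two of these variants can be sketched in a few lines, assuming the common definitions: the g-index is the largest g such that the g most cited publications together have at least g² citations, and the i10-index counts publications with at least 10 citations. The function names and data are hypothetical, and this sketch caps g at the number of publications (some definitions allow padding with uncited "virtual" papers). The annual h-index is not shown.

```python
def g_index(citations: list[int]) -> int:
    """Largest g such that the g most cited publications together have >= g**2 citations.

    Simplification: g is capped at the number of publications."""
    ranked = sorted(citations, reverse=True)  # most cited first
    total = 0
    g = 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites  # cumulative citations of the top `rank` papers
        if total >= rank * rank:
            g = rank
    return g


def i10_index(citations: list[int]) -> int:
    """Number of publications with at least 10 citations."""
    return sum(1 for c in citations if c >= 10)


# Hypothetical citation counts of one author's ten publications
papers = [25, 8, 7, 7, 7, 7, 7, 3, 1, 0]
print(g_index(papers))    # 8
print(i10_index(papers))  # 1
```

For these counts the h-index would be 7; the g-index is higher because it lets the heavily cited top paper compensate for weaker ones.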

By using an identifier such as ORCID, researchers can help ensure that publications and citations are attributed to them correctly, which also increases their visibility. Scopus automatically assigns an ID to every author it indexes; authors can view their profile and request corrections via the SupportCenter.

Publication-level indicators

Citations and references are counted and evaluated at the level of the individual publication. Beyond simply counting citations, taking the citation practices of the discipline into account makes an indicator more meaningful. This (field) normalization is usually based either on the average number of citations or on frequently cited publications/journals ("highly cited" labels).

Normalized indicators

- average-based: Field Normalized Citation Impact (FNCI)

- FNCI < 1 means below the average of similar publications, e.g. 0.85 means cited 15% less often than comparable publications

- FNCI > 1 means above the average of similar publications, e.g. 1.50 means cited 50% more often than comparable publications

- based on frequently cited publications: among the top 1%, top 5% or top 10% most cited publications of a field and time period

- based on frequently cited journals: published in the top 1%, top 5% or top 10% most cited journals of a field and time period
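Both kinds of normalization can be illustrated with a minimal sketch. It assumes that the "comparable publications" are given as a plain list of citation counts (the reference set) and that the top-X% threshold is simply the citation count of the weakest paper inside that share. Real tools typically build the reference set from field, publication year and document type and handle ties and percentile boundaries in their own ways. All names and numbers here are hypothetical.

```python
from statistics import mean


def fnci(citations: int, reference_set: list[int]) -> float:
    """Citations of one paper divided by the mean citations of comparable papers."""
    expected = mean(reference_set)
    if expected == 0:
        raise ValueError("reference set has no citations; ratio undefined")
    return citations / expected


def in_top_share(citations: int, reference_set: list[int], share: float) -> bool:
    """True if the paper reaches the citation threshold of the top `share` (e.g. 0.10)."""
    ranked = sorted(reference_set, reverse=True)
    top_n = max(1, int(len(ranked) * share))  # how many papers form the top share
    threshold = ranked[top_n - 1]             # citations of the weakest paper in it
    return citations >= threshold


# Hypothetical reference set (same field and publication year), mean = 6 citations
reference_set = [0, 1, 2, 3, 4, 5, 6, 8, 10, 21]
print(fnci(9, reference_set))                 # 1.5, i.e. cited 50% more often than average
print(in_top_share(21, reference_set, 0.10))  # True
print(in_top_share(9, reference_set, 0.10))   # False
```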

Criticism and alternative approaches

This concept of normalization stands or falls with how fields are delimited, so these indicators are less meaningful, particularly for interdisciplinary publications.

Alternative metrics (altmetrics)

These can be very useful for capturing the impact of a publication outside the scientific community. They mainly capture attention in social media (blogs, Twitter, Facebook, YouTube, etc.).

A typical example is the Altmetric Attention Score.

When using these metrics, one should be aware that they reflect how the online community perceives a work and are therefore strongly biased towards trending topics and topics that are easy to explain. They are also considerably more susceptible to manipulation than conventional metrics.

Journal-level indicators

This class of indicators aims to capture the importance of individual journals.

Impact Factor

One approach is to relate the citations a journal's articles receive to the number of articles the journal published. The oldest and best-known indicator in this class, the Impact Factor, follows this principle: the citations received in a given year by the items a journal published in the two preceding years are divided by the number of citable items it published in those two years.
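The calculation can be sketched as follows, assuming the standard Journal Impact Factor construction: citations received in one year by items from the preceding years, divided by the number of citable items from those years. The `window` parameter also covers the five-year variant. The function name and all numbers are hypothetical; deciding which items count as "citable" is left out of this sketch.

```python
def impact_factor(citations_received: dict[int, int],
                  citable_items: dict[int, int],
                  year: int, window: int = 2) -> float:
    """Citations received in `year` by items from the preceding `window` years,
    divided by the number of citable items published in those years."""
    years = range(year - window, year)  # e.g. 2021 and 2022 for year=2023, window=2
    cites = sum(citations_received.get(y, 0) for y in years)
    items = sum(citable_items.get(y, 0) for y in years)
    if items == 0:
        raise ValueError("no citable items in the window")
    return cites / items


# Hypothetical journal: citations counted in 2023, keyed by the cited items' publication year
citations_2023 = {2018: 50, 2019: 80, 2020: 120, 2021: 300, 2022: 200}
items = {2018: 100, 2019: 110, 2020: 115, 2021: 120, 2022: 130}
print(impact_factor(citations_2023, items, 2023))            # 2.0
print(impact_factor(citations_2023, items, 2023, window=5))  # about 1.30
```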

Criticism

- single-year values fluctuate considerably; besides the standard two-year window, a five-year Impact Factor is therefore also available

- no account is taken of discipline-specific differences in citation behavior

- the average number of citations per publication in a journal is driven mainly by a small share of highly cited publications; the citation impact of a journal is therefore not representative of the impact of an individual publication in that journal
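The last point can be made concrete with a small example. It uses hypothetical citation counts for ten articles in one journal and compares the mean, which is what Impact Factor-style averages rely on, with the median and with the share of articles that actually reach the mean. The function name is an illustrative assumption.

```python
from statistics import mean, median


def citation_summary(citations: list[int]) -> dict[str, float]:
    """Mean, median and share of articles cited more often than the mean."""
    avg = mean(citations)
    above = sum(1 for c in citations if c > avg)
    return {"mean": avg, "median": median(citations), "share_above_mean": above / len(citations)}


# Hypothetical citation counts of ten articles in one journal
journal = [120, 40, 5, 4, 3, 2, 2, 1, 1, 0]
print(citation_summary(journal))
# mean 17.8, median 2.5; only 20% of the articles are cited more often than the mean
```

Two of the ten articles account for 160 of the 178 citations, so the journal average says little about a typical article in it.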

Normalized indicators

The principle is similar to that of the Impact Factor, but citations are additionally weighted (for example according to the referencing habits of the citing field or the standing of the citing journal), and the resulting values are used to rank journals within their discipline. A journal's rank within its discipline then serves as the indicator of its importance. Two main approaches are used.

Citing-Side Normalization

This alternative normalization approach corrects for the length of reference lists. It rests on the assumption that differences in citation density between fields are largely due to publications in some fields tending to have longer reference lists than those in other fields. One indicator based on this concept is SNIP.
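The core idea can be sketched with the simplest form of citing-side normalization: each citation is weighted by 1/n, where n is the number of references in the citing publication. This is only an illustration of the principle and not the official SNIP formula, which uses a more refined scheme. The function name and data are hypothetical; for a journal, such scores would be aggregated over its articles.

```python
def citing_side_score(reference_list_lengths: list[int]) -> float:
    """Sum of 1/n over all citations received, where n is the number of
    references in the citing publication (fractional counting on the citing side)."""
    return sum(1 / n for n in reference_list_lengths)


# Hypothetical paper A (field with long reference lists):
# 4 citations from papers with 50 references each
paper_a = [50, 50, 50, 50]
# Hypothetical paper B (field with short reference lists):
# 2 citations from papers with 10 references each
paper_b = [10, 10]
print(len(paper_a), len(paper_b))                              # raw counts: 4 vs 2
print(citing_side_score(paper_a), citing_side_score(paper_b))  # approx. 0.08 vs 0.2
```

Paper A has twice as many raw citations, but after weighting by reference-list length paper B scores higher, which is the kind of field correction this approach aims for.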

Recursive Citation Impact Indicators

A further normalization approach, used by so-called recursive citation impact indicators, weights each citation according to the source in which it appears. The idea is that a citation in, for example, Nature or Science should count for more than a citation in a less prestigious journal. Typical examples are the SJR and the Eigenfactor.
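The recursion ("a journal is important if it is cited by important journals") can be sketched as a PageRank-style power iteration over a journal citation matrix. This is a simplified illustration, not the official SJR or Eigenfactor algorithm; both add further rules (e.g. citation windows and the treatment of journal self-citations). The damping factor, function name and journal data are hypothetical.

```python
def recursive_scores(cites: dict[str, dict[str, int]],
                     damping: float = 0.85, iterations: int = 100) -> dict[str, float]:
    """PageRank-style prestige scores; cites[a][b] = citations from journal a to journal b."""
    journals = sorted(set(cites) | {b for out in cites.values() for b in out})
    n = len(journals)
    score = {j: 1.0 / n for j in journals}  # start with equal prestige
    for _ in range(iterations):
        new = {j: (1 - damping) / n for j in journals}  # baseline share for every journal
        for j in journals:
            out = cites.get(j, {})
            total = sum(out.values())
            if total == 0:
                # journal without outgoing citations: spread its prestige evenly
                for k in journals:
                    new[k] += damping * score[j] / n
            else:
                # pass prestige on in proportion to the citations given
                for k, c in out.items():
                    new[k] += damping * score[j] * c / total
        score = new
    return score


# Hypothetical journals: X and Y each receive 2 citations,
# but X is cited by the prestigious "Major", Y only by the uncited "Minor"
cites = {
    "Major": {"X": 2},
    "Minor": {"Y": 2, "Major": 1},
    "X": {"Major": 5},
    "Y": {"Major": 5},
}
scores = recursive_scores(cites)
print(scores["X"] > scores["Y"])  # True
```

Although X and Y have the same raw citation count, X ends up with a much higher score because its citations come from a highly weighted source.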

Criticism

Here, too, the citation impact of a journal is not representative of the impact of an individual publication in that journal (see above).

Further reading

The following links lead to detailed explanations, including critical discussion, of the indicators used in InCites and Scopus.

Waltman (2016) provides an excellent overview and critical discussion of bibliometric indicators.

The Metrics Toolkit offers a quick introduction to common bibliometric indicators.