Modeling the multimodal interaction

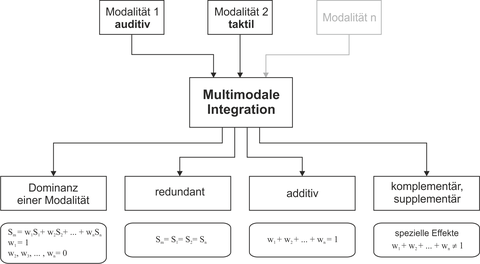

When the human brain is presented with several kinds of sensory signals that originate from a single event source, they are processed together in one unified perceptual process. The multisensory object of perception can always be understood as a "construction" of the brain. This construction is by no means arbitrary; it is based on a weighted combination of the multisensory signals (see figure). While the multimodal percept is being formed, auditory and tactile events always interact. For example, combining two modalities in a multimodal perceptual object can result in a weak, a strong, or a qualitatively entirely new percept (the result of a perceptual process). Against this background, the following questions must be clarified: How does our brain weight the information that comes from different senses to form a final perceptual object? In other words, what are the relative contributions of the different sensory modalities to a multimodal percept? Can a percept that is based solely on one modality be influenced by the simultaneous presentation of another sensory modality?
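The idea of a weighted combination can be illustrated with a minimal sketch. The text above does not state which weighting rule the brain uses. The example below assumes reliability-based (inverse-variance) weighting, one common model in the cue-combination literature, purely for illustration. The function name `combine_cues` and all numbers are hypothetical.

```python
def combine_cues(estimates, variances):
    """Combine unimodal estimates into a single multimodal estimate.

    Assumption (not taken from the text above): each modality is weighted
    by its reliability, i.e. the inverse of its variance.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)

    # Relative contribution of each modality; the weights sum to 1.
    weights = [r / total for r in reliabilities]

    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_variance = 1.0 / total
    return combined, weights, combined_variance


# Hypothetical example: an auditory and a tactile estimate of the same
# property, where the auditory signal is assumed to be less noisy.
auditory, tactile = 10.0, 14.0
combined, weights, variance = combine_cues([auditory, tactile], [1.0, 4.0])
print(f"weights: {weights}, combined estimate: {combined:.2f}")
```

Under this assumption, the less noisy modality dominates the combined estimate, and the returned weights correspond to the "relative contributions" asked about above.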

Contact

Staff

Name: Dr.-Ing. Sebastian Merchel


Chair of Acoustics and Haptics


Visiting address:

Barkhausenbau, Room BAR 59, Helmholtzstraße 18

01062 Dresden
