Dynascape

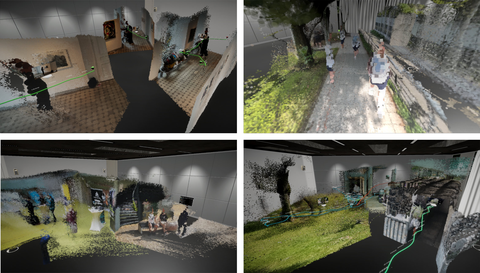

At IXLAB, we explore technological possibilities for capturing real-world dynamic scenes, such as a busy street or a crowded marketplace, and playing them back immersively in virtual reality.

We use capture techniques based on cutting-edge mobile sensors to record a dynamic scene into a single, portable, spatially tracked RGB-D video. Such a video is designed to be an informative representation of the captured scene: alongside conventional color frames, it contains depth frames and the camera's movement over time.
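To make this representation concrete, a single frame can be modelled as a timestamped color image, a depth map, and a camera pose, and each pixel with valid depth can then be lifted into a shared world space. The Python sketch below is illustrative only and does not describe Dynascape's actual file format. It assumes a pinhole camera model, metric depth aligned pixel-for-pixel with the color image, and a row-major 4x4 camera-to-world pose. `TrackedRGBDFrame`, `Intrinsics`, and `to_point_cloud` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed convention: row-major 4x4 camera-to-world matrix.
Matrix4 = List[List[float]]
Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics (focal lengths and principal point, in pixels)."""
    fx: float
    fy: float
    cx: float
    cy: float


@dataclass
class TrackedRGBDFrame:
    """One frame of a spatially tracked RGB-D video (illustrative layout, not Dynascape's)."""
    timestamp: float          # seconds since the start of the recording
    color: List[List[RGB]]    # color[v][u] -> (r, g, b)
    depth: List[List[float]]  # depth[v][u] -> metres along the optical axis; <= 0 means missing
    pose: Matrix4             # camera-to-world transform captured at this instant


def transform_point(m: Matrix4, p: Vec3) -> Vec3:
    """Apply a 4x4 rigid transform to a 3-D point (homogeneous w = 1)."""
    x, y, z = p
    return (
        m[0][0] * x + m[0][1] * y + m[0][2] * z + m[0][3],
        m[1][0] * x + m[1][1] * y + m[1][2] * z + m[1][3],
        m[2][0] * x + m[2][1] * y + m[2][2] * z + m[2][3],
    )


def unproject(frame: TrackedRGBDFrame, k: Intrinsics, u: int, v: int) -> Vec3:
    """Lift pixel (u, v) to a world-space point using its depth and the frame's pose."""
    z = frame.depth[v][u]
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return transform_point(frame.pose, (x, y, z))


def to_point_cloud(frame: TrackedRGBDFrame, k: Intrinsics) -> List[Tuple[Vec3, RGB]]:
    """Return (world point, color) pairs for every pixel with valid depth."""
    cloud = []
    for v, row in enumerate(frame.depth):
        for u, z in enumerate(row):
            if z > 0:  # skip holes in the depth map
                cloud.append((unproject(frame, k, u, v), frame.color[v][u]))
    return cloud


# Tiny 2x2 example: the camera sits 0.5 m along +x in world space.
k = Intrinsics(fx=2.0, fy=2.0, cx=1.0, cy=1.0)
frame = TrackedRGBDFrame(
    timestamp=0.0,
    color=[[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]],
    depth=[[2.0, 0.0], [1.0, 4.0]],
    pose=[[1.0, 0.0, 0.0, 0.5], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]],
)
cloud = to_point_cloud(frame, k)
```

Because every frame carries its own pose, the points from successive frames land in one consistent world space even while the capturing device moves. This is what lets a recording be placed and replayed spatially rather than shown as a flat video.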

At IXLAB, we have developed Dynascape, an open-source toolkit for authoring and immersive playback of dynamic digital scapes. Within Dynascape, we developed a suite of tools for immersively exploring datasets of spatially tracked RGB-D videos in mixed reality. The tools support editing and compositing. For editing, we propose immersive widgets that support spatial, temporal, and appearance editing. For compositing, we provide functions that let users composite multiple tracked RGB-D videos in space.
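Spatial and temporal edits, together with compositing, can be pictured as per-clip parameters applied on a shared timeline. The following is a minimal Python sketch under our own assumptions, not Dynascape's implementation. The `Clip` fields (`placement`, `start_in_scene`, `trim_in`, `trim_out`, `speed`) and the `composite` function are hypothetical names. Poses are assumed to be row-major 4x4 matrices, and frames are selected by the latest preceding timestamp rather than interpolated. Appearance editing is omitted.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Matrix4 = List[List[float]]  # assumed row-major 4x4 transform


def translation(tx: float, ty: float, tz: float) -> Matrix4:
    """Build a pure-translation 4x4 matrix."""
    return [[1.0, 0.0, 0.0, tx], [0.0, 1.0, 0.0, ty], [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]


def matmul4(a: Matrix4, b: Matrix4) -> Matrix4:
    """Multiply two 4x4 matrices (a applied after b when transforming points)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


@dataclass
class Clip:
    """A recorded clip plus hypothetical spatial and temporal edit parameters."""
    name: str
    timestamps: List[float]  # per-frame capture times (s), ascending, starting at 0
    poses: List[Matrix4]     # per-frame camera pose relative to the clip's own origin
    placement: Matrix4 = field(default_factory=lambda: translation(0.0, 0.0, 0.0))  # spatial edit
    start_in_scene: float = 0.0       # temporal edit: when the clip starts on the shared timeline
    trim_in: float = 0.0              # temporal edit: clip-local time where playback begins
    trim_out: Optional[float] = None  # temporal edit: clip-local end time (None = last frame)
    speed: float = 1.0                # temporal edit: playback-rate multiplier

    def frame_index(self, scene_t: float) -> Optional[int]:
        """Map shared-timeline time to a clip frame, or None if the clip is not playing."""
        if scene_t < self.start_in_scene:
            return None
        local_t = self.trim_in + (scene_t - self.start_in_scene) * self.speed
        end = self.timestamps[-1] if self.trim_out is None else self.trim_out
        if local_t > end:
            return None
        # Latest frame captured at or before local_t.
        return max(bisect_right(self.timestamps, local_t) - 1, 0)


def composite(clips: List[Clip], scene_t: float) -> List[Tuple[str, int, Matrix4]]:
    """For each active clip, return its frame index and camera pose in the shared scene."""
    active = []
    for clip in clips:
        i = clip.frame_index(scene_t)
        if i is not None:
            # Spatial composition: the clip's placement is applied on top of the recorded pose.
            active.append((clip.name, i, matmul4(clip.placement, clip.poses[i])))
    return active


# Two 1.5 s clips with 0.5 s frame spacing; the camera moves 0.5 m along z per frame.
times = [0.0, 0.5, 1.0, 1.5]
poses = [translation(0.0, 0.0, 0.5 * i) for i in range(4)]
street = Clip("street", times, poses)
market = Clip("market", times, poses, placement=translation(3.0, 0.0, 0.0),
              start_in_scene=1.0, trim_in=0.5)
at_1_2 = composite([street, market], 1.2)
```

At scene time 1.2 s, `street` shows its third frame, and `market` (placed 3 m along x and starting at 1.0 s from its own 0.5 s mark) shows its second. Storing edits as separate parameters leaves the recorded poses untouched, so every edit in this sketch is non-destructive.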

Publications

- Zhongyuan Yu, Daniel Zeidler, Victor Victor, and Matthew McGinity. 2023. Dynascape: Immersive Authoring of Real-World Dynamic Scenes with Spatially Tracked RGB-D Videos. In Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology (VRST '23). Association for Computing Machinery, New York, NY, USA, Article 10, 1–12. https://dl.acm.org/doi/10.1145/3611659.3615718 [code]

Videos

Dynascape Supplemental Material: [video]

Demo Scenes

Dynascape supports authoring and viewing in both mixed reality and virtual reality. Authors can place recordings directly at the location where they are intended to be viewed. The toolkit is readily applicable in fields such as gaming, virtual tourism, digital art, and more.

Get in touch

Curious? Interested? Want to learn more or experience it yourself? Please send us a message: Matthew McGinity, Zhongyuan Yu