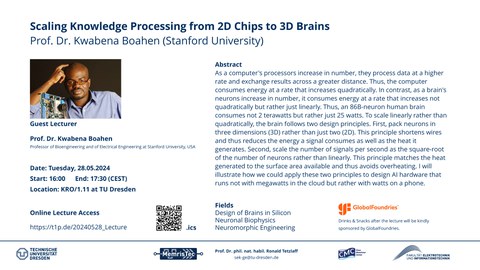

May 28, 2024; Talk

Scaling Knowledge Processing from 2D Chips to 3D Brains - Prof. Dr. Kwabena Boahen, Stanford University

Professor of Bioengineering and of Electrical Engineering at Stanford University, USA

Abstract

As a computer's processors increase in number, they process data at a higher rate and exchange results across a greater distance. Thus, the computer consumes energy at a rate that increases quadratically with the number of processors. In contrast, as a brain's neurons increase in number, it consumes energy at a rate that increases not quadratically but rather just linearly. Thus, an 86B-neuron human brain consumes not 2 terawatts but rather just 25 watts. To scale linearly rather than quadratically, the brain follows two design principles. First, pack neurons in three dimensions (3D) rather than just two (2D). This principle shortens wires and thus reduces the energy a signal consumes as well as the heat it generates. Second, scale the number of signals per second as the square root of the number of neurons rather than linearly. This principle matches the heat generated to the surface area available and thus avoids overheating. I will illustrate how we could apply these two principles to design AI hardware that runs not with megawatts in the cloud but rather with watts on a phone.
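As a rough sanity check on the figures quoted in the abstract, the short Python sketch below works out the power per neuron implied by 25 watts and compares the gap between the two quoted totals with the neuron count. The variable and function names are illustrative and do not come from the talk. The sketch relies on an assumption that the abstract does not state: that the quadratic and linear scaling laws share a comparable per-unit constant, so the quadratic total would be about N times the linear one.

```python
# Figures quoted in the abstract.
NEURONS = 86e9            # neurons in a human brain
LINEAR_WATTS = 25.0       # power quoted for linear scaling
QUADRATIC_WATTS = 2e12    # power quoted for quadratic scaling (2 terawatts)


def power_per_neuron(total_watts: float, neurons: float) -> float:
    """Average power per neuron, in watts."""
    return total_watts / neurons


def scaling_gap(quadratic_watts: float, linear_watts: float) -> float:
    """Factor by which the quadratic figure exceeds the linear figure."""
    return quadratic_watts / linear_watts


per_neuron = power_per_neuron(LINEAR_WATTS, NEURONS)
gap = scaling_gap(QUADRATIC_WATTS, LINEAR_WATTS)

print(f"Power per neuron: {per_neuron:.2e} W")
print(f"Quadratic/linear gap: {gap:.1e}")
print(f"Neuron count:         {NEURONS:.1e}")
# Assumption (not from the abstract): if both laws share a similar per-unit
# constant, the gap should be of the same order as the neuron count.
print(f"Gap relative to neuron count: {gap / NEURONS:.2f}")
```

Under that assumption, the gap between the two totals comes out within about ten percent of the neuron count, which is consistent with the abstract's contrast between quadratic and linear growth. The per-neuron figure is roughly 0.3 nanowatts.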