Thesis Topics

The Immersive Experience Lab (IXLAB) offers a range of thesis topics and research project opportunities in immersive media for Bachelor, Master and Diploma students.

For all projects, you will be provided with the necessary infrastructure: VR or AR head-mounted displays, computers, tracking systems etc.

If any of the topics interest you, or you would like to propose your own topic, follow the instructions outlined here: Writing a thesis with IXLAB

NOTE: Theses on self-proposed topics are no longer accepted this semester. Please reach out directly to the respective contact person if you are interested in a topic from the provided list.

The topics are presented in English only (see also the English pages). Please ask if you would like supervision in German and/or to write your thesis in German.

Immersive analytics is the use of immersive media to aid the analysis of complex data [1, 2]. It builds on the hypothesis that the spatial visualisation and interaction capabilities of VR or AR can aid or accelerate data analysis.

The objective of this project is to develop immersive visualizations and interactions that incorporate aggregated or extracted data alongside the raw data, as exemplified in [3]. The aim is to reduce visual clutter and enhance the overall legibility of the information. As a point of reference, a design space will be provided to guide the implementation. An open-source visualization toolkit is considered a potential outcome of this project.
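As a rough illustration of what showing "aggregated data alongside the raw data" can mean, the sketch below bins 3D points into a uniform voxel grid and returns a count and centroid per occupied cell. An immersive view could then draw one summary glyph per cell and reveal the raw points on demand. This is a minimal sketch under our own assumptions (points as `(x, y, z)` tuples, a fixed cell size, and the hypothetical function name `aggregate_points`); it is not the design space or toolkit mentioned above.

```python
from collections import defaultdict
from math import floor


def aggregate_points(points, cell_size):
    """Bin 3D points into a uniform voxel grid (illustrative sketch).

    Returns a dict mapping each occupied voxel index (i, j, k) to a tuple
    (count, centroid), where centroid is the mean position of its points.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    # Per-voxel accumulator: [count, sum_x, sum_y, sum_z]
    acc = defaultdict(lambda: [0, 0.0, 0.0, 0.0])
    for x, y, z in points:
        key = (floor(x / cell_size), floor(y / cell_size), floor(z / cell_size))
        a = acc[key]
        a[0] += 1
        a[1] += x
        a[2] += y
        a[3] += z
    return {
        key: (n, (sx / n, sy / n, sz / n))
        for key, (n, sx, sy, sz) in acc.items()
    }


if __name__ == "__main__":
    raw = [(0.1, 0.2, 0.3), (0.4, 0.1, 0.2), (1.5, 1.5, 1.5)]
    for cell, (count, centroid) in sorted(aggregate_points(raw, 1.0).items()):
        # One summary glyph per cell could be drawn at `centroid`,
        # scaled by `count`, with the raw points revealed on demand.
        print(cell, count, centroid)
```

A uniform grid is only the simplest aggregation; clustering or density estimation would be natural alternatives to explore within the design space.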

This topic is applicable to both research projects and master/bachelor theses. Please contact Zhongyuan Yu for more information.

[1] https://dl.acm.org/doi/10.1145/3544548.3580715

[2] https://doi.org/10.1007/978-3-030-01388-2

[3] https://doi.org/10.3389/frobt.2019.00082

When creating immersive experiences, virtual elements are commonly authored with desktop software on 2D screens. However, for mixed reality applications designed to be situated in specific physical environments, certain steps in the creation process can be better performed in situ, using mixed reality to author content directly in the place where it will be viewed. In this project, you will design and prototype an in situ authoring system that helps users manage virtual content directly in mixed reality space.

Prerequisites: Familiarity with Unity and 3D graphics is very helpful.

This topic is applicable to both research projects and master/bachelor theses. Please contact Zhongyuan Yu for more information.

Research Project / Bachelor Thesis / Master Thesis

Project Type: Applied / Technological

At IXLAB we have developed a multi-user mixed reality system that allows groups of people to explore large-scale structures without the constraints of cables or tracking systems [1]. In this project you will explore the challenges and opportunities of using the system for classroom scenarios. Two approaches may be taken:

The first is to consider the features and requirements of a general-purpose immersive MR presentation system; in other words, PowerPoint for multi-user mixed reality. What features would it need? What does an "immersive lecture" in multi-user mixed reality look like?

The second is to consider a specific educational context, for example a specific lesson in geography, biology, music, chemistry or anatomy. This approach is well suited to collaboration with educators or instructors from various domains. IXLAB will support the identification of potential use cases / partners for the application. Students are also encouraged to initiate their own collaborations with instructors in any field.

Requirements: Proficiency with Unity or 3D modelling skills are helpful.

Contact: Krishnan Chandran

[1] F. Schier, D. Zeidler, K. Chandran, Z. Yu and M. McGinity, "ViewR: Architectural-Scale Multi-User Mixed Reality with Mobile Head-Mounted Displays," in IEEE Transactions on Visualization and Computer Graphics, https://doi.org/10.1109/TVCG.2023.3299781

Research Project / Bachelor Thesis / Master Thesis

The IXLAB team has developed a video conferencing system that offers a creative alternative to traditional solutions such as Zoom. Our software allows participants, content, environments and other virtual artefacts to be placed in a game-like environment.

This project will explore the artistic possibilities of the above-mentioned software. The student will work towards the development of an applied use case starting from the building blocks provided by the lab.

We will also address questions such as how virtual space and bodies influence our social behaviour online, and explore how concepts of "space", "movement", "place", "togetherness" and "presence" emerge from different types of simulations and systems.

The student will use our infrastructure to develop and evaluate an applied or artistic use case of the software. IXLAB will support the identification of potential use cases / partners for the application.

Contact: Krishnan Chandran / Daniel Zeidler

In the project VRAD, we develop multi-user mixed reality exposure therapy treatments for anxiety disorders. During exposure, we sometimes use biosignals such as heart and breathing rate, eye movements and skin conductance to provide real-time feedback on the patient's state of arousal and level of fear. These are potentially helpful tools for the psychotherapist, as they can indicate whether the patient is employing protective behaviours that may render the treatment ineffective. Furthermore, they can help in evaluating sessions and progress over time, and in comparing physiological levels of fear with the fear levels expected when exposed to a certain situation or experience.

In this project you will explore methods for visualising the biosignals inside mixed reality. You will design and develop prototypes and assess their effectiveness in terms of readability, cognitive load, ease of use, intrusiveness and other factors.
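Raw biosignals are noisy, so a visualisation will typically show a smoothed value relative to a personal baseline rather than individual samples. The sketch below shows one minimal way to do this, assuming exponential smoothing and a linear mapping to a 0 to 1 display level (which could drive, for example, a colour or bar length). The class name, parameters and heart-rate numbers are hypothetical and carry no clinical meaning.

```python
class SmoothedSignal:
    """Exponentially smoothed biosignal mapped to a 0..1 display level.

    Hypothetical helper: `baseline` is the patient's resting value (e.g. heart
    rate in bpm) and `max_delta` is the rise above baseline shown as full scale.
    """

    def __init__(self, baseline, max_delta, alpha=0.1):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        if max_delta <= 0:
            raise ValueError("max_delta must be positive")
        self.baseline = baseline
        self.max_delta = max_delta
        self.alpha = alpha  # higher = more responsive, lower = smoother
        self.value = baseline

    def update(self, sample):
        """Feed one new sample and return the updated display level."""
        # Exponential moving average: move a fraction alpha towards the sample.
        self.value += self.alpha * (sample - self.value)
        return self.display_level()

    def display_level(self):
        """Smoothed deviation from baseline, clamped to [0, 1]."""
        level = (self.value - self.baseline) / self.max_delta
        return min(1.0, max(0.0, level))


if __name__ == "__main__":
    hr = SmoothedSignal(baseline=70.0, max_delta=50.0, alpha=0.2)
    for bpm in [72, 75, 90, 110, 105]:
        # The level could drive e.g. the colour or length of an in-scene bar.
        print(f"{bpm} bpm -> level {hr.update(bpm):.2f}")
```

The smoothing factor trades responsiveness against stability, which is itself one of the readability and intrusiveness questions such a study could evaluate.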

Please contact us for more info.

Contact: Florian Schier / Daniel Badeja

In this project, we will create a persistent augmented reality world, populated with a rich ecosystem of virtual autonomous agents and creatures. Our target location will be the giant atrium at the heart of the Informatik Andreas-Pfitzmann-Bau (although other locations are possible). The goal is to bring this space to life with a real-time simulated ecosystem that can evolve and grow over time, reacting to real-world factors such as the movements of people, sounds, lights and shadows.

Users see this world through virtual or augmented reality displays but, importantly, the virtual world is persistent: it "exists" even when nobody is watching!

The project contains two subprojects that can be tackled independently or together:

- Measuring and tracking the movements of people, changes in light and sounds in the real world, and bringing them into the virtual world.

- Developing an evolutionary ecosystem of creatures and agents capable of producing interesting behaviours and morphologies.

Prerequisites: interest in agent-based learning or evolutionary algorithms.

Contact: Victor <victor.victor@tu-dresden.de>


In this project we explore the use of immersive spatial interfaces for the control and exploration of generative AI image systems. In particular, we investigate the use of large mixed reality spaces to aid in the exploration and curation of generative images.

This project can take a number of forms. For example, you might develop a mixed reality system to accelerate the search for the "desired" image. The system might provide tools for curating collections and laying out large constellations of images in space, as well as spatial interfaces for operations such as variation and mutation, interpolation, and inpainting or outpainting. The creation of images might be visualised as a branching trajectory through latent space.
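Interpolation between two generated images is often performed in the generator's latent space rather than on pixels. Assuming a generator driven by Gaussian latent vectors, spherical linear interpolation (slerp) is a common choice, because plain linear interpolation passes through points of unusually low norm. The sketch below (hypothetical function names, latent vectors as plain lists of floats) produces a sequence of latent vectors that could be laid out as a trajectory in space; decoding each vector into an image is left to whichever model is used.

```python
import math


def slerp(a, b, t):
    """Spherical linear interpolation between latent vectors a and b, t in [0, 1]."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("latent vectors must be non-zero")
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding error
    omega = math.acos(cos_omega)  # angle between the two vectors
    if math.sin(omega) < 1e-6:
        # Nearly parallel or opposite vectors: slerp weights are numerically
        # unstable here, so fall back to linear interpolation.
        return [(1.0 - t) * x + t * y for x, y in zip(a, b)]
    wa = math.sin((1.0 - t) * omega) / math.sin(omega)
    wb = math.sin(t * omega) / math.sin(omega)
    return [wa * x + wb * y for x, y in zip(a, b)]


def interpolation_path(a, b, steps):
    """Evenly spaced latent vectors from a to b (inclusive), e.g. for a spatial trajectory."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [slerp(a, b, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    z0 = [rng.gauss(0.0, 1.0) for _ in range(8)]
    z1 = [rng.gauss(0.0, 1.0) for _ in range(8)]
    for i, z in enumerate(interpolation_path(z0, z1, 5)):
        # Each z would be decoded into an image by the generative model in use.
        print(i, round(math.sqrt(sum(v * v for v in z)), 3))
```

A branching trajectory could then be built by starting new paths from any intermediate vector, for example towards a randomly mutated copy of it.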

Alternatively, you might develop a mixed reality tool for conducting "random walks" through the space of possibilities: a never-ending maze of galleries and corridors decorated with, or built from, images. Add generative text to this, and we can begin to imagine a VR version of Jorge Luis Borges' "The Library of Babel".

Contact: Florian Schier

Research Project / Bachelor or Master Thesis

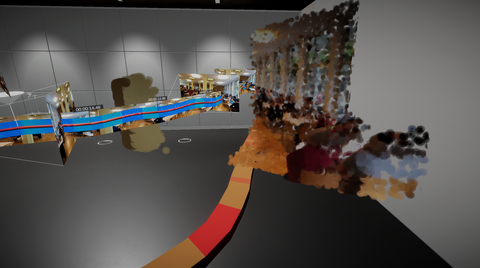

At IXLAB we have developed a multi-user mixed reality system that allows people to explore large-scale structures without the constraints of wires or tracking systems [1]. With this system, large architectural spaces such as the atrium of the APB can be transformed into immersive visualisation arenas.

In this project you will explore the opportunities and challenges of using the system to visualise data in large arenas such as the APB atrium. What new advantages or use cases emerge when visualising data at architectural scales? Can architectural affordances such as stairways, mezzanines and elevators be exploited? What kinds of interaction modalities are needed to deal with the great distances and range of scales?

You will begin with the IXLAB mixed reality template, select some suitable datasets and then explore the design space of multi-user AR visualisation over large scales.

[1] F. Schier, D. Zeidler, K. Chandran, Z. Yu and M. McGinity, "ViewR: Architectural-Scale Multi-User Mixed Reality with Mobile Head-Mounted Displays," in IEEE Transactions on Visualization and Computer Graphics, https://doi.org/10.1109/TVCG.2023.3299781

Contact: Zhongyuan Yu

Research Project / Thesis

At IXLAB we have developed a multi-user mixed reality system that allows people to explore large-scale structures without the constraints of wires or tracking systems [1]. In this project you will explore the challenges and opportunities of using the system for architectural structures. This includes addressing such topics as:

- Mixing real and virtual architectural spaces

- Group navigation in MR

- Achieving accurate light simulation on low-powered mobile HMDs

- Acoustics simulation

[1] F. Schier, D. Zeidler, K. Chandran, Z. Yu and M. McGinity, "ViewR: Architectural-Scale Multi-User Mixed Reality with Mobile Head-Mounted Displays," in IEEE Transactions on Visualization and Computer Graphics, https://doi.org/10.1109/TVCG.2023.3299781

Contact: Krishnan Chandran

Sonic augmented reality is a medium in which artificial sounds are perceived as if emanating from the real environment. Ideally, the sounds blend indistinguishably with the real world and are perceived with all the spatial and acoustic properties that accompany hearing in the real world.

A common method of achieving this is to track the position and orientation of the user's head and then modify a sound according to the spatial relationships between the listener, the sound source and the acoustic environment. The results depend greatly on the specific algorithms employed, which range from simple simulation of interaural differences to more complex wave-based simulations of the acoustic environment.
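As an example from the simplest end of that range, the sketch below estimates the interaural time difference (ITD) from a tracked head position and yaw using Woodworth's spherical-head formula, ITD = (r / c)(θ + sin θ), where θ is the lateral angle of the source. It is restricted to the horizontal plane, ignores level differences and room acoustics, and assumes an average head radius of 8.75 cm; the function names are hypothetical and this is not the rendering approach used in the lab.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius in metres
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 degrees C


def relative_azimuth(head_xy, head_yaw, source_xy):
    """Angle of the source relative to the head's facing direction, in radians.

    Horizontal plane only. Yaw and the result are measured counter-clockwise
    from the +x axis; the result is wrapped to [-pi, pi].
    """
    dx = source_xy[0] - head_xy[0]
    dy = source_xy[1] - head_xy[1]
    angle = math.atan2(dy, dx) - head_yaw
    return math.atan2(math.sin(angle), math.cos(angle))


def woodworth_itd(azimuth):
    """Interaural time difference magnitude in seconds (Woodworth model).

    Sources behind the listener are folded to the front, since this simple
    model depends only on the lateral angle.
    """
    lateral = abs(azimuth)
    if lateral > math.pi / 2:
        lateral = math.pi - lateral
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (lateral + math.sin(lateral))


if __name__ == "__main__":
    head, yaw = (0.0, 0.0), 0.0  # listener at the origin, facing +x
    for source in [(2.0, 0.0), (1.0, 1.0), (0.0, 2.0), (-2.0, 0.1)]:
        az = relative_azimuth(head, yaw, source)
        print(source, f"{math.degrees(az):7.1f} deg", f"{woodworth_itd(az) * 1e6:6.1f} us")
```

Because the model cannot distinguish front from back, comparing it against richer renderers (e.g. HRTF-based or wave-based) in the laboratory described below is exactly the kind of comparison such a setup would enable.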

In this project we will construct a sonic augmented reality laboratory. Combining a spatial tracking system with headphones, binaural microphones and an array of loudspeakers, it will allow direct comparison of both real and virtual sonic events, and direct comparison of different rendering algorithms.

Contact: Krishnan Chandran (krishnan.chandran@tu-dresden.de)

Research Project

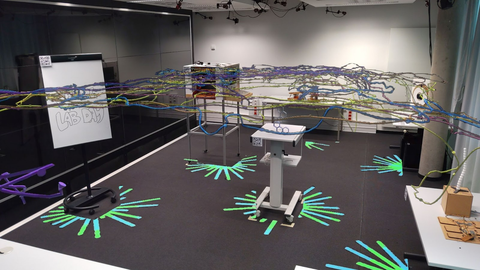

Sonic structures is a large project exploring the idea of "4E sound and music": sonic experience as fundamentally embodied, enactive, embedded and extended through space.

(For more info, see https://tu-dresden.de/ing/informatik/smt/im/ixlab/xim2022)

We are developing a multi-user mixed reality system that allows real-time transformation of sound and music into visual structures. To do this, we require methods for analysing sound and music and extracting meaningful data.

In this project you will develop tools for real-time audio feature analysis in Unity.

Previous experience with audio signal processing is helpful.
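As a possible starting point, the sketch below computes three common frame-based audio features: RMS level (loudness), zero-crossing rate (noisiness) and spectral centroid (brightness). It is written in Python for readability rather than in the C# used in Unity, uses a naive DFT where a real-time tool would use an FFT, and its function names are hypothetical; it is not the lab's existing tooling.

```python
import cmath
import math


def rms(frame):
    """Root-mean-square level of one audio frame (a loudness proxy)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign changes (a noisiness proxy)."""
    if len(frame) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency in Hz (a 'brightness' proxy).

    Uses a naive O(n^2) DFT for clarity; a real-time tool would use an FFT.
    """
    n = len(frame)
    weighted = total = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        bin_value = sum(
            frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        magnitude = abs(bin_value)
        weighted += (k * sample_rate / n) * magnitude
        total += magnitude
    return weighted / total if total > 0 else 0.0


if __name__ == "__main__":
    sr = 8000
    frame = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(64)]
    print(f"RMS {rms(frame):.3f}, ZCR {zero_crossing_rate(frame):.3f}, "
          f"centroid {spectral_centroid(frame, sr):.1f} Hz")
```

In a real-time setting these functions would run once per incoming buffer, and their outputs could be smoothed before being mapped onto parameters of the visual structures.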

How might the interface between human and vehicle be improved or entirely reimagined? In this project, we use immersive simulation to explore novel and experimental ways of controlling a vehicle. This includes exploring novel methods for using information arriving from car-mounted or remote sensors or from intelligent systems.

In this project we will develop a virtual reality driving simulator, and then use this simulation to explore different human-vehicle interaction paradigms.

Contact: Prof. Matthew McGinity