Pointcloud VR

3D scans of real objects, structures, and scenes are increasingly used in a wide range of fields: building virtual environments, training robots and autonomous cars, archaeology, museums, surveying, forensics, remote sensing, and creating 3D assets for computer games and art. Current methods for capturing real-world structures tend to produce dense, unstructured 3D point clouds, which often must be classified into different subsets before they can be used in their intended application. Given the complexity of the data and the human-centered nature of these tasks, there is a need for user-friendly interactive tools that let users explore such data sets.

In this research direction, we develop immersive systems and interfaces for exploring raw captured 3D point cloud data, including labeling, editing, morphing, annotating, and analyzing it.

Pointcloud Labeling

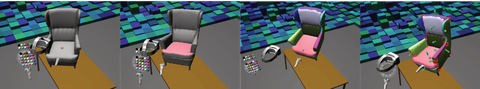

Together with the CGV group, IXLAB developed a virtual reality toolkit for labeling massive point clouds captured from the real world.

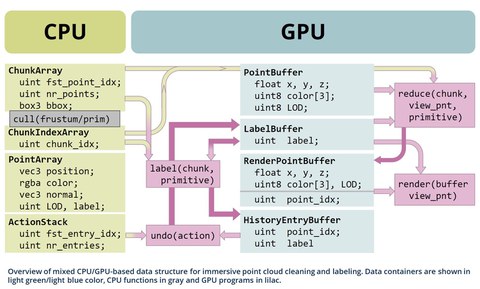

We propose immersive labeling interfaces and the underlying mixed CPU/GPU data structure, which supports continuous level-of-detail (CLOD) rendering as well as rapid selection, translation, and removal of points with instantaneous visual feedback. Our method uses a chunk-based compute shader selection algorithm that enables fast spatial queries and fast modification of point labels.
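To illustrate why chunking speeds up selection, here is a minimal CPU-side sketch, not the toolkit's actual implementation. It assumes points are bucketed into axis-aligned cubic chunks of a hypothetical edge length `chunk_size`, and that the user's VR selection tool is a sphere. A coarse test discards whole chunks whose boxes miss the sphere; only the surviving chunks are tested point by point. In a compute shader, that fine test would typically run as one thread per point. The names `ChunkedPointCloud`, `chunk_key`, and `label_sphere` are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
ChunkKey = Tuple[int, int, int]


def chunk_key(p: Point, chunk_size: float) -> ChunkKey:
    """Map a point to the integer coordinates of the cubic chunk containing it."""
    return (math.floor(p[0] / chunk_size),
            math.floor(p[1] / chunk_size),
            math.floor(p[2] / chunk_size))


@dataclass
class ChunkedPointCloud:
    points: List[Point]
    chunk_size: float  # hypothetical chunk edge length
    labels: List[int] = field(default_factory=list)
    chunks: Dict[ChunkKey, List[int]] = field(default_factory=dict, init=False)

    def __post_init__(self) -> None:
        if not self.labels:
            self.labels = [0] * len(self.points)  # 0 = unlabeled
        buckets: Dict[ChunkKey, List[int]] = defaultdict(list)
        for idx, p in enumerate(self.points):
            buckets[chunk_key(p, self.chunk_size)].append(idx)
        self.chunks = dict(buckets)

    def label_sphere(self, center: Point, radius: float, label: int) -> int:
        """Assign `label` to every point within `radius` of `center`.

        Returns the number of points whose label was written."""
        cs = self.chunk_size
        r2 = radius * radius
        # Range of chunk keys overlapped by the sphere's bounding box.
        lo = chunk_key(tuple(c - radius for c in center), cs)
        hi = chunk_key(tuple(c + radius for c in center), cs)
        touched = 0
        for i in range(lo[0], hi[0] + 1):
            for j in range(lo[1], hi[1] + 1):
                for k in range(lo[2], hi[2] + 1):
                    indices = self.chunks.get((i, j, k))
                    if not indices:
                        continue  # empty chunk: nothing to test
                    # Coarse test: squared distance from the center to the chunk's box.
                    box_d2 = 0.0
                    for axis, key in enumerate((i, j, k)):
                        lo_edge = key * cs
                        gap = max(lo_edge - center[axis], 0.0,
                                  center[axis] - (lo_edge + cs))
                        box_d2 += gap * gap
                    if box_d2 > r2:
                        continue  # chunk lies entirely outside the sphere
                    # Fine test: per point (one GPU thread per point in a shader).
                    for idx in indices:
                        p = self.points[idx]
                        d2 = sum((p[a] - center[a]) ** 2 for a in range(3))
                        if d2 <= r2:
                            self.labels[idx] = label
                            touched += 1
        return touched
```

For example, `ChunkedPointCloud(pts, chunk_size=1.0).label_sphere((0.0, 0.0, 0.0), 0.3, 1)` labels only the points within 0.3 units of the origin, and visits at most the eight chunks around it rather than the whole cloud. The cost of a selection thus scales with the number of points near the tool, not with the size of the scan.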

Pointcloud Structuring

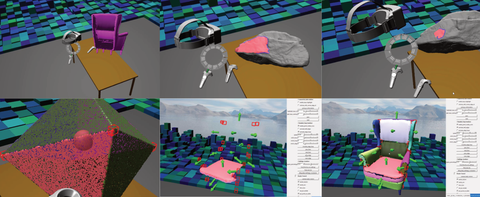

Beyond labeling, we also developed a VR toolkit for extracting structural information from scanned, unorganized point clouds. It includes tools for enhanced immersive viewing of point cloud data, filtering noisy points, extracting geometric information, interactively correcting errors, and extracting connectivity information.
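The page does not say how noisy points are filtered. As a hedged illustration of what such a step can look like, the sketch below implements statistical outlier removal, a common generic technique that is assumed here and not confirmed as the toolkit's method. Each point's mean distance to its `k` nearest neighbors is computed, and points whose mean exceeds the global mean by more than `std_ratio` standard deviations are dropped. The function name and both parameters are hypothetical, and the brute-force neighbor search is only suitable for small inputs.

```python
import math
import statistics
from typing import List, Tuple

Point = Tuple[float, float, float]


def statistical_outlier_filter(points: List[Point], k: int = 4,
                               std_ratio: float = 1.0) -> List[int]:
    """Return the indices of points kept as inliers.

    A point is kept if its mean distance to its k nearest neighbors is at most
    mean + std_ratio * stddev, taken over all points' mean neighbor distances."""
    n = len(points)
    if n <= k:
        return list(range(n))  # too few points to estimate neighborhoods
    mean_knn = []
    for i, p in enumerate(points):
        # Brute-force O(n^2) neighbor search; a spatial index would be used at scale.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    mu = statistics.fmean(mean_knn)
    sigma = statistics.pstdev(mean_knn)
    threshold = mu + std_ratio * sigma
    return [i for i, d in enumerate(mean_knn) if d <= threshold]
```

Isolated points far from any surface get a large mean neighbor distance and fall above the threshold, while points on densely sampled surfaces are kept. In an immersive tool, the kept and rejected sets could be shown with different colors so that the user can adjust `std_ratio` interactively before committing the removal.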

Publications

Tianfang Lin, Zhongyuan Yu, Nico Volkens, Matthew McGinity and Stefan Gumhold, "An Immersive Labeling Method for Large Point Clouds," 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Shanghai, China, 2023, pp. 829-830, doi: 10.1109/VRW58643.2023.00257.

Zhongyuan Yu, Tianfang Lin, Stefan Gumhold and Matthew McGinity, "An Immersive Method for Extracting Structural Information from Unorganized Point Clouds," 2024 Nicograph International (NicoInt), Hachioji, Japan, 2024, pp. 24-31, doi: 10.1109/NICOInt62634.2024.00014.

Get in touch

Curious? Interested? Want to learn more or experience it yourself? Please send us a message: Matthew McGinity, Zhongyuan Yu