Learning the language of the human genome

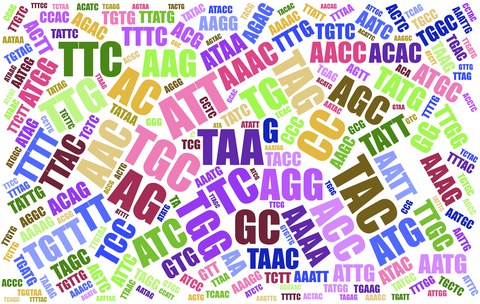

Recent advances in natural language processing, in particular the use of transformers in deep learning, suggest that genomic data could benefit greatly from these technologies. We therefore started developing large language models that treat the human genome itself as text, otherwise following the training strategies of GPT-3. As with models of natural language, fine-tuning tasks will allow us to address specific questions, such as the classification of genome elements or the prediction of gene expression and mutation rates.
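GPT-style models do not read raw characters. They read tokens, typically produced by byte-pair encoding (BPE), which repeatedly merges the most frequent adjacent pair of symbols into a new "word". The sketch below shows how the same idea could turn a DNA string into such words, starting from the four nucleotides. It is an illustration under that assumption, not the project's actual tokenizer, and the function names (`learn_bpe`, `merge_pair`, `most_frequent_pair`) are hypothetical.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most frequent adjacent token pair, or None if fewer than two tokens.

    Ties are broken by first occurrence in the sequence.
    """
    pairs = Counter(zip(tokens, tokens[1:]))
    if not pairs:
        return None
    return pairs.most_common(1)[0][0]


def merge_pair(tokens, pair):
    """Replace non-overlapping occurrences of `pair`, scanning left to right, with one merged token."""
    merged = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def learn_bpe(sequence, num_merges):
    """Learn up to `num_merges` BPE merges on a DNA string (hypothetical helper).

    Starts from single nucleotides and returns the list of merges plus the
    final tokenization of the sequence.
    """
    tokens = list(sequence.upper())
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:  # nothing left to merge
            break
        tokens = merge_pair(tokens, pair)
        merges.append(pair)
    return merges, tokens


if __name__ == "__main__":
    merges, tokens = learn_bpe("ATATATGC", 2)
    print(merges)  # [('A', 'T'), ('AT', 'AT')]
    print(tokens)  # ['ATAT', 'AT', 'G', 'C']
```

On a real genome, the merge table would be learned once over a large corpus and then reused to tokenize new sequences, so that frequent motifs end up as single vocabulary entries.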

We expect this approach to confirm much of what is already known about gene regulation. We also anticipate novel findings on the functionality of repeats, how stability is encoded, the consequences of genetic diversity, and differences in genome organization when we train our models on different species.

In addition, these models have the potential to substantially improve the analysis of multi-omics data, for example by imputing data at higher sequencing depth, increasing resolution, and extracting information through fine-tuning tasks.

The first preprints from this project can be found here:

https://www.biorxiv.org/content/10.1101/2023.07.11.548593v1

https://www.biorxiv.org/content/10.1101/2023.07.19.549677v1

If you are interested in the revised manuscripts, please get in touch.

The GROVER model is available on Hugging Face.

The project is currently supported through a Maria Reiche Postdoctoral Fellowship and a Marie Curie fellowship to Melissa Sanabria.

Image: "Words" of the SARS-CoV-2 genome