Analysis of 3D point cloud surveillance for effective risk mitigation on airport aprons

PROJECT INFORMATION

Contracting entity: DFG (Deutsche Forschungsgemeinschaft, German Research Foundation)

Project term: 41 months, October 2013 to February 2017

MOTIVATION AND OBJECTIVES

After the world's first Remote Tower Service (RTS) became operational in Sweden in 2015, and with the continuing implementation of the International Civil Aviation Organization's (ICAO) Advanced Surface Movement Guidance and Control System (A-SMGCS) concept, the need for real-time generation of highly precise, error-free and robust sensor data has become stronger than ever. Data capturing the traffic situation and the operating conditions on the movement area is deemed essential for today's airport surveillance systems and for more automated airport surveillance in the future.

In contrast to this development, current airport ground surveillance still relies largely on the controller's Out-The-Window View (OTWV), partly supported by video cameras and Surface Movement Radar (SMR). These information sources contribute to the controller's situational awareness (SA), which Endsley defines as the “perception of the elements in the environment within a volume of time and space, the comprehension of their meaning and the projection of their status in the near future”. The first level of Endsley's three-stage model of SA concerns setting up the controller's “picture”, comprising “[…] the status, attributes, and dynamics of relevant elements in the environment”. Consequently, this level is considered to have a high impact on safety, as missing or incorrect information can lead to reduced “comprehension of the current situation” (level 2) and/or limited “projection of future states” (level 3).

Some task analyses and a large number of legacy operational control procedures that require direct visual contact with an object underline the crucial role of the OTWV in building and maintaining the controller's picture. Even the aforementioned RTS concepts and their practical implementations still adhere to the paradigm of the (artificially reproduced) OTWV.

The OTWV, however, depends strongly on weather and lighting conditions (e.g. fog, precipitation, darkness) and on obstacles in the line of sight. When such view-obstructing factors are present, the amount of traffic handled will most likely be reduced to compensate for the controller's insufficient picture, e.g. through the application of Low Visibility Operations in Air Traffic Control. A more critical case arises if this insufficient picture reduces the controller's ability to recognize conflicts and leads to poor decision-making. In this context, Jones & Endsley found that 72.4% of all safety-relevant occurrences from the Aviation Safety Reporting System (ASRS) constitute level 1 situational awareness failures of the Air Traffic Controller (ATCO) (“Fail to perceive information or misperception of information”).

Various risk analyses underline the need for action to mitigate the current risks of incidents and accidents on airport movement areas, both on the maneuvering area (e.g. runway incursion prevention) and, in particular, on the apron. In parallel, the SESAR consortia target a reduction of total risk in the ATM domain by a factor of 10, which also addresses the movement area including the apron.

To achieve an effective reduction of risk for apron operations, the authors aim to establish a constant, appropriate picture for the apron controller, who represents the central authority for creating and maintaining operational apron safety. To implement this approach, three-dimensional (3D) sensor data from LiDAR was found to be the most promising candidate for meeting these new requirements regarding information quality and quantity.

LiDAR (Light Detection and Ranging) is a laser-based method that measures distances between the sensor and any reflecting object. Combined with efficient data processing, current LiDAR devices for solid-target detection have the unique capability to detect unknown, very small objects on the ground (such as foreign object debris, FOD) while also classifying known, larger objects (e.g. class “Aircraft”) at distances of up to several hundred meters. These capabilities result from the following LiDAR characteristics: non-cooperative environmental scanning, high pulse repetition rates with high pulse intensities, pulse frequencies reaching into the petahertz range, and high precision and accuracy in the millimeter range. These characteristics also make LiDAR independent of lighting conditions (day/night) and less sensitive to adverse weather conditions than the human eye and standard video cameras.
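The ranging principle can be illustrated with a minimal sketch. It assumes direct time-of-flight measurement, where the range is half the pulse's round-trip time multiplied by the speed of light, and a scanner that reports each return as a range plus azimuth and elevation angles, as rotating multi-beam scanners typically do. The function names and the angle convention are illustrative assumptions, not the project's processing chain.

```python
import math

# Speed of light in vacuum (m/s); the small refractive-index correction for air is ignored here.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Return the one-way distance (m) for a pulse's round-trip travel time (s)."""
    # The pulse travels to the target and back, so the total path is halved.
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert one return from sensor-centred spherical to Cartesian coordinates (m).

    Assumed (hypothetical) convention: azimuth is measured in the horizontal plane
    from the sensor's x-axis, elevation is measured upwards from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    # A return arriving after 2 microseconds corresponds to roughly 300 m.
    d = range_from_time_of_flight(2e-6)
    print(f"{d:.1f} m")
    print(to_cartesian(d, azimuth_deg=30.0, elevation_deg=-2.0))
```

In this simple model a timing error of 1 ns already corresponds to roughly 15 cm of range, which indicates how fine the timing resolution of a ranging device must be to achieve high precision. Applying the conversion to every return of a scan yields the 3D point cloud on which all further processing operates.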

The chosen risk mitigation approach aims to compensate for an insufficient picture by providing the controller with information on present or emerging hazards. Based on this information, the controller shall be able to take corrective action in time to avoid or at least manage hazardous situations. This information will consist of visual indicators that either represent typical causes of an emerging hazard or make an already existing hazard visible.
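As a hypothetical illustration of such an indicator, the sketch below flags detected objects whose ground position lies inside a protected zone, for example an aircraft stand that should be clear before an aircraft taxis in. The `DetectedObject` type, the stand geometry and the rule itself are assumptions chosen for illustration; the project's actual indicator set is not specified here. The zone test uses the standard even-odd ray casting point-in-polygon method.

```python
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass
class DetectedObject:
    """Hypothetical output of an upstream detection step (apron ground coordinates in m)."""
    object_id: int
    x: float
    y: float
    label: str  # e.g. "unknown", "vehicle", "aircraft"


def point_in_polygon(x: float, y: float, polygon: list[Point]) -> bool:
    """Even-odd ray casting: count how often a ray towards +x crosses the polygon edges."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line (y1 != y2 here).
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def stand_occupancy_indicators(objects: list[DetectedObject], stand: list[Point]) -> list[str]:
    """Return one indicator message per object found inside the stand polygon."""
    return [
        f"object {o.object_id} ({o.label}) inside stand area"
        for o in objects
        if point_in_polygon(o.x, o.y, stand)
    ]


if __name__ == "__main__":
    # Hypothetical 40 m x 60 m stand, axis-aligned for simplicity.
    stand = [(0.0, 0.0), (40.0, 0.0), (40.0, 60.0), (0.0, 60.0)]
    objects = [
        DetectedObject(1, 12.0, 30.0, "unknown"),  # e.g. possible FOD on the stand
        DetectedObject(2, 55.0, 10.0, "vehicle"),  # outside the stand
    ]
    for message in stand_occupancy_indicators(objects, stand):
        print(message)  # prints "object 1 (unknown) inside stand area"
```

In the first development stage described below, such messages would simply be displayed; a later automated stage could combine several indicators of this kind with model knowledge about the ongoing operation.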

In the first development stage of the concept, a simple visual presentation of these indicators at the default apron Controller Working Position (CWP) is envisioned. At a later stage, automated hazard pattern recognition will interpret these indicators based on model knowledge to assist the controller. Regardless of the development stage, the concept relies on LiDAR 3D point data and requires functioning object detection, object classification and object tracking.
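The detection and tracking functions named above can be sketched under strong simplifying assumptions: the point cloud has already been transformed into a levelled apron frame with a flat ground plane, objects are found by removing near-ground points and grouping the remaining points into connected grid cells, and tracks are maintained by greedy nearest-neighbour association between frames. All thresholds, function names and the clustering method are hypothetical choices for illustration, not the project's published algorithms; classification (e.g. into “Aircraft”) is left out.

```python
import math
from collections import deque

Point3 = tuple[float, float, float]


def detect_objects(points: list[Point3], ground_z: float = 0.0, min_height: float = 0.02,
                   cell_size: float = 0.25, min_points: int = 3) -> list[tuple[float, float]]:
    """Return 2D centroids of point clusters standing above an assumed flat ground plane."""
    # 1) Ground removal: keep only points clearly above the plane z = ground_z.
    above = [p for p in points if p[2] - ground_z > min_height]

    # 2) Rasterise the remaining points onto a 2D occupancy grid.
    cells: dict[tuple[int, int], list[Point3]] = {}
    for p in above:
        key = (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))
        cells.setdefault(key, []).append(p)

    # 3) Connected-component labelling over 8-neighbouring occupied cells (breadth-first search).
    seen: set[tuple[int, int]] = set()
    centroids = []
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        cluster: list[Point3] = []
        while queue:
            cx, cy = queue.popleft()
            cluster.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    nb = (cx + dx, cy + dy)
                    if nb in cells and nb not in seen:
                        seen.add(nb)
                        queue.append(nb)
        # 4) Drop tiny clusters as likely noise (a trade-off that also affects very small FOD).
        if len(cluster) >= min_points:
            n = len(cluster)
            centroids.append((sum(p[0] for p in cluster) / n, sum(p[1] for p in cluster) / n))
    return centroids


def associate(tracks: dict[int, tuple[float, float]], detections: list[tuple[float, float]],
              gate: float = 1.0) -> dict[int, tuple[float, float]]:
    """Greedy nearest-neighbour association of new detections to existing tracks.

    Each detection updates the closest not-yet-updated track within `gate` metres;
    unmatched detections start new tracks, and tracks without a detection are dropped.
    """
    updated: dict[int, tuple[float, float]] = {}
    next_id = max(tracks, default=-1) + 1
    for det in detections:
        best_id, best_dist = None, gate
        for tid, pos in tracks.items():
            if tid in updated:
                continue
            dist = math.dist(pos, det)
            if dist <= best_dist:
                best_id, best_dist = tid, dist
        if best_id is None:
            best_id = next_id
            next_id += 1
        updated[best_id] = det
    return updated


if __name__ == "__main__":
    # Synthetic frame: flat ground points plus a small raised object near (5, 5).
    ground = [(x * 0.5, y * 0.5, 0.0) for x in range(20) for y in range(20)]
    obj = [(5.0, 5.0, 0.1), (5.1, 5.0, 0.12), (5.0, 5.1, 0.08)]
    tracks = associate({}, detect_objects(ground + obj))
    # Next frame: the object has moved 0.3 m along x and keeps its track ID.
    moved = [(x + 0.3, y, z) for x, y, z in obj]
    tracks = associate(tracks, detect_objects(ground + moved))
    print(tracks)
```

Grid-based clustering is used here only because it is short and dependency-free. Real aprons are neither perfectly flat nor perfectly level, so fitted ground models and more robust clustering and tracking methods would be needed in practice.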

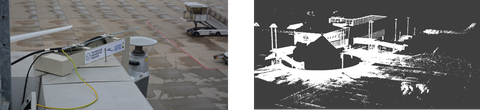

left: LiDAR sensor at Dresden airport; right: LiDAR raw data point cloud

RELEVANT PUBLICATIONS

- J. Mund, P. Latzel and H. Fricke (2016): Can LiDAR Point Clouds effectively contribute to Safer Apron Operations?, SESAR Innovation Days (SID), Delft, The Netherlands

- J. Mund, F. Michel, F. Dieke-Meier, H. Fricke, L. Meyer and C. Rother (2016): Introducing LiDAR Point Cloud-based Object Classification towards Safer Apron Operations, International Symposium on Enhanced Solutions for Aircraft and Vehicle Surveillance Applications (ESAVS), Berlin, Germany

- J. Mund, A. Zouhar, L. Meyer, H. Fricke and C. Rother (2015): Performance Evaluation of LiDAR Point Clouds towards Automated FOD Detection on Airport Aprons, International Conference on Application and Theory of Automation in Command and Control Systems (ATACCS), University Paul Sabatier (IRIT), Toulouse, France

- J. Mund, L. Meyer and H. Fricke (2015): Einsatz von LiDAR-Sensorik zur Risikominderung auf dem Flugplatzvorfeld [Use of LiDAR sensors for risk mitigation on the airport apron], Ingenieurspiegel, 03/2015

- J. Mund, L. Meyer and H. Fricke (2014): LiDAR Performance Requirements and Optimized Sensor Positioning for Point Cloud-based Risk Mitigation at Airport Aprons, International Conference on Research in Air Transportation (ICRAT), Istanbul, Turkey

- L. Meyer, J. Mund, B. Marek and H. Fricke (2013): Performance test of LiDAR point cloud data for the support of apron control services, International Symposium on Enhanced Solutions for Aircraft and Vehicle Surveillance Applications (ESAVS), Berlin, Germany

RELEVANT DIPLOMA AND PROJECT THESES

- Master Thesis: Safety Assessment of automated surveillance functions for a LiDAR-based Ground Monitoring System for Apron Controllers

- Diploma Thesis: Experimental Quantification of LiDAR detection capabilities for airport apron surveillance purposes

- Diploma Thesis: Evaluating the potential of LiDAR point cloud-based information for risk mitigation on the airport apron by analyzing experimental data from a HITL simulation study

- Project Thesis: Identification and evaluation of sensor systems for the surveillance of ground-based operations at the airport

- Project Thesis: Identification of hazard and cause indicators related to activities on the airport apron

- Diploma Thesis: Identifying and validating the potential of LiDAR sensing for effective turnaround monitoring

- Project Thesis: Development of a test design for the potential analysis of point cloud-based information for risk mitigation on the airport apron

- Project Thesis: Development of target criteria and identification of boundary conditions to evaluate sites for a LiDAR sensor in the context of apron surveillance

- Project Thesis: Conception of a point cloud-based, visual human-machine interface using the example of apron control at Dresden Airport

- Project Thesis: Modeling of apron control activities for a qualitative analysis of the influence of erroneous information perception on the decision-making of apron controllers

- Diploma Thesis: Development of a physical beam grid model for 3D scenery scanning using a Velodyne HDL-64 LiDAR

- Diploma Thesis: Evaluation of sensor sites for LiDAR surveillance technology for optimal object detection on an aircraft stand of an airport apron (2013)

- Diploma Thesis: Field-test-based evaluation of LiDAR sensor sites for innovative apron control, using the example of Dresden Airport

- Diploma Thesis: Experimental determination of the discrimination quality of LiDAR sensor technology for use in airport apron control

- Project Thesis: Evaluation of sensor technology for the vision-based ground taxiing process of an unmanned aircraft