LiDAR II

Safe apron operation through automated hazard detection using a weather-robust LiDAR (Light Detection And Ranging) object recognition system.

Project information

- Client: DFG - German Research Foundation

- Duration: July 2018 - March 2022

- Follow-up project of the DFG-funded project LiDAR I (FR 2440 / 4-1)

In recent decades, the level of automation in airspace monitoring and control has steadily increased, both on board aircraft and on the ground (e.g., Airborne Collision Avoidance Systems). With the exception of multi-sensor Advanced Surface Movement Guidance and Control Systems (A-SMGCS), which have so far been fully deployed at only a few large airports, the current level of automation in apron control remains comparatively low. One reason is the inherent complexity and variety of objects and processes on the apron, which generally hinders (automated) monitoring of these areas even with A-SMGCS support. Another is that a machine can only execute a monitoring and control task effectively if the quality of its input information is adequate, which conventional sensor technology cannot yet ensure. In light of the SESAR demand for increased automation in the ATM system, and given increasingly powerful sensor systems, apron control offers potential for increased operational safety. Automated assistance functions can be realized for interest-related information visualization, attention control and alerting, prediction of future conflicts, and decision support for conflict detection and resolution. In terms of human information processing, these functions are expected to reduce the cognitive complexity of controlling aircraft on the ground, improving task prioritization and execution while reducing workload.

The follow-up project LiDAR II is intended to create the fundamentals and techniques for automatically interpreting complex, dynamic apron scenes in LiDAR data under difficult weather conditions. The goal is to analyze object interactions, going beyond the object detection, classification and instance recognition addressed previously. Building on the earlier research, a LiDAR-based surveillance system is to be developed that supports the apron controller by displaying potentially dangerous situations in apron scenes using typical visual characteristics.

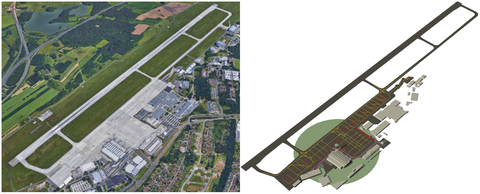

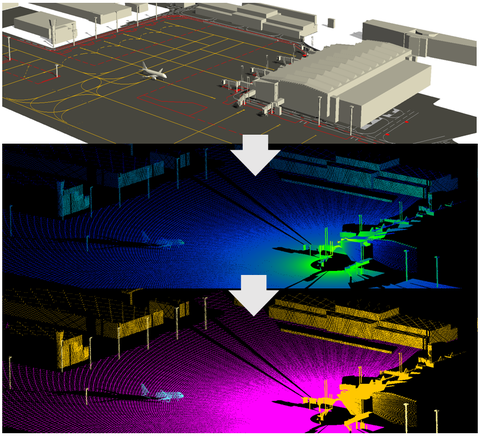

State-of-the-art algorithms in LiDAR semantic segmentation rely heavily on large-scale data sets with fine-grained labels. Although some hand-labeled data sets are publicly available, the point-wise annotation of 3D point clouds is painstaking and extremely cumbersome work. Consequently, we propose a simulation-based approach to generate synthetic training data of the apron using a virtual airport environment that integrates a LiDAR sensor model. Accurate, true-to-scale 3D modeling of the airport environment is a key building block for simulating realistic apron operations. To that end, we constructed a 3D model of Dresden Airport using Computer-Aided Design (CAD) software.

In this way, arbitrary scenarios captured under different operational conditions, including static objects and moving aircraft, provide labeled point data. Using this data, we successfully trained and tested a LiDAR semantic segmentation model with emphasis on aircraft approaching the gate after arrival or leaving it for departure, thin structures (poles), airport buildings, and the ground plane. The developed technique provides an important baseline for the expected performance of the trained model on real data. We believe that the resulting framework provides additional visual cues capturing relevant semantic information that can potentially assist the controller in complex situations.

The modular simulation environment integrates a LiDAR sensor model of the sensor installed at Dresden Airport, physical properties of the environment, detailed 3D models of the scene (see footnote 1), and kinematic models of dynamic objects. It is suitable for integrating further sensor types and makes it possible to identify optimal sensor positions at the airport.

The simulation environment can automatically generate large amounts of training data (simulated LiDAR point clouds and corresponding label information) for static and dynamic airport scenes. The resulting data can be used to train systems that enable the automated detection of objects at the airport, including non-cooperative ones, the analysis of their movements, and the automated detection and prediction of potentially dangerous situations.
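To illustrate how a simulated scan yields labels for free, the following is a minimal sketch, not the project's simulation environment. It assumes a heavily simplified scene (a flat ground plane and a single axis-aligned box standing in for an object on the apron) and an idealized sensor without noise, beam divergence or weather effects. All names, label ids and parameter values (`simulate_scan`, `GROUND`, `OBJECT`, the elevation range, the maximum range) are hypothetical.

```python
import numpy as np

GROUND, OBJECT = 0, 1  # hypothetical label ids, not the project's label set


def ray_directions(n_azimuth=360, n_elevation=16, el_min_deg=-30.0, el_max_deg=-1.0):
    """Unit direction vectors for a regular azimuth/elevation scan pattern."""
    az = np.linspace(-np.pi, np.pi, n_azimuth, endpoint=False)
    el = np.radians(np.linspace(el_min_deg, el_max_deg, n_elevation))
    az, el = np.meshgrid(az, el)
    dirs = np.stack(
        [np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)], axis=-1
    )
    return dirs.reshape(-1, 3)


def hit_ground(origin, dirs):
    """Distance along each ray to the plane z = 0 (inf if the ray never hits)."""
    with np.errstate(divide="ignore", invalid="ignore"):
        t = -origin[2] / dirs[:, 2]
    return np.where(t > 0, t, np.inf)


def hit_box(origin, dirs, box_min, box_max):
    """Slab test: distance along each ray to an axis-aligned box (inf if missed)."""
    with np.errstate(divide="ignore", invalid="ignore"):
        t1 = (box_min - origin) / dirs
        t2 = (box_max - origin) / dirs
    t_near = np.max(np.minimum(t1, t2), axis=1)
    t_far = np.min(np.maximum(t1, t2), axis=1)
    hit = (t_near <= t_far) & (t_far > 0)
    return np.where(hit, np.maximum(t_near, 0.0), np.inf)


def simulate_scan(origin, box_min, box_max, max_range=200.0):
    """Return (points, labels): the nearest hit per ray and the surface it belongs to."""
    dirs = ray_directions()
    t_ground = hit_ground(origin, dirs)
    t_box = hit_box(origin, dirs, box_min, box_max)
    t = np.minimum(t_ground, t_box)
    valid = t <= max_range  # rays without a return inside the range are dropped
    points = origin + t[valid][:, None] * dirs[valid]
    labels = np.where(t_box[valid] < t_ground[valid], OBJECT, GROUND)
    return points, labels


# Sensor mounted 10 m above the ground, box placed 20 m in front of it.
sensor = np.array([0.0, 0.0, 10.0])
points, labels = simulate_scan(
    sensor, np.array([20.0, -5.0, 0.0]), np.array([30.0, 5.0, 4.0])
)
```

Because the simulator knows which surface each ray hit, every point carries an exact label without manual annotation. A realistic environment would replace the box with detailed 3D meshes and add the sensor's actual scan pattern and noise characteristics.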

1) Movable 3D models were kindly provided by TRANSOFT Solutions.

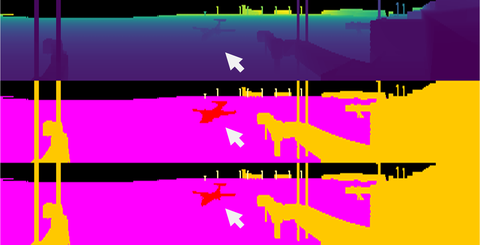

The semantic segmentation of LiDAR point clouds is a rapidly developing field in computer vision and machine learning, and a wide variety of efficient deep learning algorithms now exists for this task. In our work we employed RangeNet++ [1], which outperformed existing algorithms in terms of accuracy and inference time. The representational capabilities of the chosen method are particularly important for resolving small, thin structures (e.g., poles), subtle shape differences (e.g., between different types of aircraft) and the intended direction of movement of aircraft and other movable objects. See, for example, [2, 3] for the recent state of the art in semantic segmentation of LiDAR point clouds.

Specifically, RangeNet++ transforms input point clouds into spherical range images that are processed by a deep 2D CNN using planar convolutions. The resulting pixel-wise labels are projected back onto the 3D point cloud, followed by a fast k-nearest-neighbor post-processing step that resolves potential label inconsistencies in 3D.
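The spherical projection step can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the RangeNet++ reference implementation: the image size and the vertical field of view (`height`, `width`, `fov_up_deg`, `fov_down_deg`) are placeholder values and do not describe the sensor installed at Dresden Airport, and the function name `spherical_projection` is hypothetical.

```python
import numpy as np


def spherical_projection(points, height=64, width=1024,
                         fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project an (N, 3) point cloud onto a (height, width) range image.

    Returns the range image (-1 marks empty pixels) and, for every input
    point, the pixel row and column it falls into.
    """
    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)
    fov = fov_up - fov_down

    depth = np.linalg.norm(points, axis=1)
    safe_depth = np.maximum(depth, 1e-9)  # avoid division by zero at the origin

    yaw = np.arctan2(points[:, 1], points[:, 0])
    pitch = np.arcsin(np.clip(points[:, 2] / safe_depth, -1.0, 1.0))

    # Map angles to continuous image coordinates.
    u = 0.5 * (1.0 - yaw / np.pi) * width           # column from azimuth
    v = (1.0 - (pitch - fov_down) / fov) * height   # row from elevation

    cols = np.clip(np.floor(u), 0, width - 1).astype(np.int64)
    rows = np.clip(np.floor(v), 0, height - 1).astype(np.int64)

    # Keep the nearest return when several points fall into the same pixel.
    range_image = np.full((height, width), np.inf)
    valid = depth > 0
    np.minimum.at(range_image, (rows[valid], cols[valid]), depth[valid])
    range_image[np.isinf(range_image)] = -1.0
    return range_image, rows, cols
```

Given a per-pixel label image `label_image` predicted by the 2D network, a first label for every 3D point can be read back with `label_image[rows, cols]`. Points that share a pixel then receive the same label, which is the kind of inconsistency the k-nearest-neighbor post-processing step is meant to resolve.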

We have shown that we can reliably segment and label the objects of interest in simulated scenes using a single LiDAR sensor that already covers a large portion of the airport infrastructure, including the apron. Specifically, for moving objects that are subject to a short holding time, the segmentation model consistently recovered the fine-grained semantic distinction between object instances (A320, Q400) and their intended directions of movement. The trained segmentation model also recognized static objects of the airport infrastructure with nearly 100% accuracy for arbitrary LiDAR scanning durations. Moreover, even on our non-optimal hardware, the inference time of the semantic segmentation model is less than 2 s for large point clouds containing over 6 million points. In our experiments, we also observed that the segmentation model consistently detected aircraft instances when the type and the intended direction of movement were disregarded. From this, we conclude that it is generally possible to derive the precise position of an aircraft from a labeled point cloud.
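As a simple illustration of that last point, the sketch below estimates an object position as the centroid of all points carrying a given label. It is an assumption-laden example rather than the method used in the project: the class id `AIRCRAFT`, the function name `labeled_object_position` and the `min_points` threshold are hypothetical.

```python
import numpy as np

AIRCRAFT = 1  # hypothetical class id, not the project's label set


def labeled_object_position(points, labels, class_id=AIRCRAFT, min_points=10):
    """Estimate position and extent of one labeled object.

    points: (N, 3) array of x, y, z coordinates.
    labels: (N,) array of per-point class ids.
    Returns (centroid, extent), or None if too few points carry the label.
    """
    mask = labels == class_id
    if int(mask.sum()) < min_points:
        return None
    selected = points[mask]
    centroid = selected.mean(axis=0)
    extent = selected.max(axis=0) - selected.min(axis=0)  # bounding-box size
    return centroid, extent
```

Since a single sensor only sees the surfaces facing it, such a centroid is biased toward the sensor. Fitting a known aircraft model to the labeled points would be a more accurate but more involved alternative.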

Our experimental evaluation suggests that both the generation of synthetic LiDAR scans and the training of the semantic segmentation model work well and can readily be transferred to other airports. The proposed semantic segmentation framework contributes to surveillance by potentially assisting the air traffic controller (ATCo) with additional visual cues that capture the local traffic situation.

Our framework also provides the foundation for implementing automated functions that make airport operations safer, e.g., the timely detection of potential conflicts and incidents between moving objects, the detection of foreign object debris (FOD), speed monitoring, and Runway Monitoring and Conflict Alerting (RMCA).

Finally, operationally relevant information can in general be derived from a labeled point cloud, including the Actual Off-Block Time (AOBT), Actual In-Block Time (AIBT), Actual Take-Off Time (ATOT), and Actual Landing Time (ALDT).

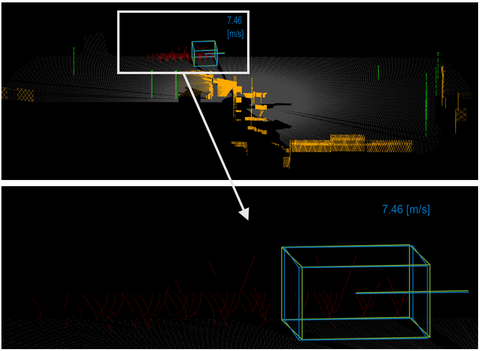

In addition, we developed a detection and tracking method for fast-moving, non-cooperative objects in semantic LiDAR scans using our virtual airport environment. Our method integrates a novel sampling strategy into a basic Kalman filter. The strategy exploits the scanning principle of the LiDAR sensor to select points from sparse, deteriorated point sets as a function of the velocity of the scanned object and its distance from the sensor. Experimental results on simulated data demonstrate that the proposed strategy performs within or close to the position and velocity measurement limits set by the A-SMGCS requirements, even with a single sensor, and also for non-cooperative objects. Limitations of the approach mainly result from self-occlusions of the scanned surfaces, which we plan to address in future work, e.g., by integrating multiple LiDAR sensors into the airport environment.
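For orientation, a basic Kalman filter of the kind referred to above can be sketched as follows. This is a generic constant-velocity filter in the ground plane, given here only as background. It does not reproduce the adaptive point sampling strategy, and the class name `ConstantVelocityKalman` and all noise parameters are assumptions chosen for illustration.

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter with state [x, y, vx, vy] and position-only measurements."""

    def __init__(self, x0, y0, pos_var=1.0, vel_var=100.0,
                 meas_var=0.25, accel_var=1.0):
        self.x = np.array([x0, y0, 0.0, 0.0])              # initial state
        self.P = np.diag([pos_var, pos_var, vel_var, vel_var])
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0, 0.0]])          # measure position only
        self.R = meas_var * np.eye(2)                      # measurement noise
        self.accel_var = accel_var                         # process noise strength

    def predict(self, dt):
        """Propagate state and covariance over a time step dt (seconds)."""
        F = np.eye(4)
        F[0, 2] = dt
        F[1, 3] = dt
        # Process noise from an unmodeled, random acceleration.
        G = np.array([[0.5 * dt**2, 0.0],
                      [0.0, 0.5 * dt**2],
                      [dt, 0.0],
                      [0.0, dt]])
        Q = self.accel_var * (G @ G.T)
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def update(self, z):
        """Correct the prediction with a measured position z = [x, y]."""
        innovation = z - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P


# Track an object moving at 10 m/s along x, measured every 0.1 s.
kf = ConstantVelocityKalman(0.0, 0.0)
for k in range(1, 101):
    kf.predict(0.1)
    kf.update(np.array([1.0 * k, 0.0]))
```

In a LiDAR setting, the measured position `z` would be derived from the points selected on the tracked object in each scan. How those points are chosen is exactly where a sampling strategy such as the one described above would enter.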

[1] A. Milioto, I. Vizzo, J. Behley, and C. Stachniss, RangeNet++: Fast and Accurate LiDAR Semantic Segmentation, in Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2019)

[2] J. Behley, M. Garbade, A. Milioto, J. Quenzel, S. Behnke, C. Stachniss, and J. Gall, SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences, in Proc. of the IEEE/CVF International Conference on Computer Vision (ICCV) (2019)

[3] A. Geiger, P. Lenz, and R. Urtasun, Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite, in Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2012)

During the project the following results were published:

- Johannes Mund, Alexander Zouhar and Hartmut Fricke (2018): Performance Analysis of a LiDAR System for Comprehensive Airport Ground Surveillance under Varying Weather and Lighting Conditions (ICRAT 2018), Barcelona, Spain

- Hannes Brassel, Alexander Zouhar and Hartmut Fricke (2019): Validating LiDAR vs. Conventional OTWV/CCTV surveillance technology for safety critical airport operations (SID 2019), Athens, Greece

- Hannes Brassel, Alexander Zouhar and Hartmut Fricke (2020): 3D modeling of the airport environment for fast and accurate LiDAR Semantic Segmentation of Apron Operations (DASC 2020), San Antonio (virtual conference), Texas, US

- Hannes Brassel, Alexander Zouhar and Hartmut Fricke (2020): Adaptive point sampling for LiDAR-based detection and tracking of fast-moving vehicles using a virtual airport environment (SID 2020), virtual conference

Completed work:

- DA (2019): Performance analysis of object recognition and comparison using LiDAR sensors, conventional surveillance technologies and human vision

- FP (2019): Development of a model to predict aircraft taxiing on the apron

- StA (2019): Specification of visual patterns for an automated LiDAR based hazard detection system at Dresden Airport

- FP (2020): Pose estimation and tracking of taxiing aircraft in labelled LiDAR point clouds of the airport apron

- MA (2020): Quantitative motion prediction of dynamic objects at the airport using rule-based motion prediction methods

- DA (2020): Development of a precipitation model for the LiDAR simulation environment


Contact

© Sven Ellger


Research Associate

Dr.-Ing. Hannes Braßel


Chair of Air Transport Technology and Logistics

Visiting address:

Gerhart-Potthoff-Bau (POT), Room 166, Hettnerstraße 1-3

01069 Dresden